Build effective agents with Model Context Protocol using simple, composable patterns.


mcp-agent is a simple, composable framework to build agents using Model Context Protocol.

Note

mcp-agent's vision is that MCP is all you need to build agents, and that simple patterns are more robust than complex architectures for shipping high-quality agents.

mcp-agent gives you the following:

- Full MCP support: It fully implements MCP, and handles the pesky business of managing the lifecycle of MCP server connections so you don't have to.

- Effective agent patterns: It implements every pattern described in Anthropic's Building Effective Agents in a composable way, allowing you to chain these patterns together.

- Durable agents: It works for simple agents and scales to sophisticated workflows built on Temporal so you can pause, resume, and recover without any API changes to your agent.

Altogether, this is the simplest and easiest way to build robust agent applications.
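The first bullet above is about connection lifecycles. In practice that means opening every server an agent needs and reliably closing all of them, even when something fails. Below is a minimal sketch of the idea using the standard library's `contextlib.AsyncExitStack`. `fake_server_connection` is a hypothetical stand-in for a real MCP connection, and this is not how mcp-agent is implemented internally:

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager

events: list[str] = []


@asynccontextmanager
async def fake_server_connection(name: str):
    # Hypothetical stand-in for connecting to one MCP server (e.g. over stdio)
    events.append(f"open {name}")
    try:
        yield f"session:{name}"
    finally:
        events.append(f"close {name}")


async def with_servers(names: list[str]) -> list[str]:
    # Leaving the stack closes every connection in reverse order, even on error
    async with AsyncExitStack() as stack:
        sessions = [await stack.enter_async_context(fake_server_connection(n)) for n in names]
        events.append("agent runs with " + ", ".join(sessions))
        return sessions


asyncio.run(with_servers(["fetch", "filesystem"]))
print(events)
```

The framework's value is that you never write this bookkeeping yourself. `async with agent:` in the examples below plays the role of the exit stack.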

We welcome all kinds of contributions, feedback and your help in improving this project.

```python
import asyncio

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="hello_world")


async def main():
    async with app.run():
        agent = Agent(
            name="finder",
            instruction="Use filesystem and fetch to answer questions.",
            server_names=["filesystem", "fetch"],
        )
        async with agent:
            llm = await agent.attach_llm(OpenAIAugmentedLLM)
            answer = await llm.generate_str("Summarize README.md in two sentences.")
            print(answer)


if __name__ == "__main__":
    asyncio.run(main())

# Add your LLM API key to `mcp_agent.secrets.yaml` or set it in env.
# The Getting Started guide (https://docs.mcp-agent.com/get-started/overview) walks through configuration and secrets in detail.
```

- Connect LLMs to MCP servers in simple, composable patterns like map-reduce, orchestrator, evaluator-optimizer, router & more.

- Create MCP servers with a FastMCP-compatible API. You can even expose agents as MCP servers.

- Core: Tools ✅ Resources ✅ Prompts ✅ Notifications ✅

- Scales to production workloads using Temporal as the agent runtime backend without any API changes.

- Beta: Deploy agents yourself, or use mcp-c for a managed agent runtime. All apps are deployed as MCP servers.

mcp-agent's complete documentation is available at docs.mcp-agent.com, including full SDK guides, CLI reference, and advanced patterns. This README gives a high-level overview to get you started.

- llms-full.txt: contains the entire documentation.
- llms.txt: sitemap listing key pages in the docs.
- docs MCP server

- Overview

- Minimal example

- Quickstart

- Why mcp-agent

- Core concepts

- Workflow patterns

- CLI reference

- Authentication

- Advanced

- Cloud deployment

- Examples

- FAQs

- Community & contributions

Tip

The CLI is available via `uvx mcp-agent`.

To get up and running, scaffold a project with `uvx mcp-agent init` and deploy with `uvx mcp-agent deploy my-agent`.

You can get up and running in 2 minutes by running these commands:

```bash
mkdir hello-mcp-agent && cd hello-mcp-agent
uvx mcp-agent init
uv init
uv add "mcp-agent[openai]"
# Add openai API key to `mcp_agent.secrets.yaml` or set `OPENAI_API_KEY`
uv run main.py
```

We recommend using uv to manage your Python projects (`uv init`).

```bash
uv add "mcp-agent"
```

Alternatively:

```bash
pip install mcp-agent
```

Also add optional packages for LLM providers (e.g. `uv add "mcp-agent[openai, anthropic, google, azure, bedrock]"`).

Tip

The examples directory has several example applications to get started with.

To run an example, clone this repo (or generate one with `uvx mcp-agent init --template basic --dir my-first-agent`):

```bash
cd examples/basic/mcp_basic_agent # Or any other example
# Option A: secrets YAML
# cp mcp_agent.secrets.yaml.example mcp_agent.secrets.yaml && edit mcp_agent.secrets.yaml
uv run main.py
```

Here is a basic "finder" agent that uses the fetch and filesystem servers to look up a file, read a blog, and write a tweet.

finder_agent.py

```python
import asyncio

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="hello_world_agent")


async def example_usage():
    async with app.run() as mcp_agent_app:
        logger = mcp_agent_app.logger

        # This agent can read the filesystem or fetch URLs
        finder_agent = Agent(
            name="finder",
            instruction="""You can read local files or fetch URLs.
                Return the requested information when asked.""",
            server_names=["fetch", "filesystem"],  # MCP servers this Agent can use
        )

        async with finder_agent:
            # Automatically initializes the MCP servers and adds their tools for LLM use
            tools = await finder_agent.list_tools()
            logger.info("Tools available:", data=tools)

            # Attach an OpenAI LLM to the agent (defaults to GPT-4o)
            llm = await finder_agent.attach_llm(OpenAIAugmentedLLM)

            # This will perform a file lookup and read using the filesystem server
            result = await llm.generate_str(
                message="Show me what's in README.md verbatim"
            )
            logger.info(f"README.md contents: {result}")

            # Uses the fetch server to fetch the content from URL
            result = await llm.generate_str(
                message="Print the first two paragraphs from https://www.anthropic.com/research/building-effective-agents"
            )
            logger.info(f"Blog intro: {result}")

            # Multi-turn interactions by default
            result = await llm.generate_str("Summarize that in a 128-char tweet")
            logger.info(f"Tweet: {result}")


if __name__ == "__main__":
    asyncio.run(example_usage())
```

mcp_agent.config.yaml

```yaml
execution_engine: asyncio
logger:
  transports: [console] # You can use [file, console] for both
  level: debug
  path: "logs/mcp-agent.jsonl" # Used for file transport
  # For dynamic log filenames:
  # path_settings:
  #   path_pattern: "logs/mcp-agent-{unique_id}.jsonl"
  #   unique_id: "timestamp" # Or "session_id"
  #   timestamp_format: "%Y%m%d_%H%M%S"

mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
    filesystem:
      command: "npx"
      args:
        [
          "-y",
          "@modelcontextprotocol/server-filesystem",
          "<add_your_directories>",
        ]

openai:
  # Secrets (API keys, etc.) are stored in an mcp_agent.secrets.yaml file which can be gitignored
  default_model: gpt-4o
```

There are too many AI frameworks out there already. But mcp-agent is the only one that is purpose-built for a shared protocol: MCP. mcp-agent pairs Anthropic's Building Effective Agents patterns with a batteries-included MCP runtime so you can focus on behaviour, not boilerplate. Teams pick it because it is:

- Composable – every pattern ships as a reusable workflow you can mix and match.

- MCP-native – any MCP server (filesystem, fetch, Slack, Jira, FastMCP apps) connects without custom adapters.

- Production ready – Temporal-backed durability, structured logging, token accounting, and Cloud deploys are first-class.

- Pythonic – a handful of decorators and context managers wire everything together.

Docs: Welcome to mcp-agent • Effective patterns overview.

Every project revolves around a single MCPApp runtime that loads configuration, registers agents and MCP servers, and exposes tools/workflows. The Core Components guide walks through these building blocks.

MCPApp initialises configuration, logging, tracing, and the execution engine so everything shares one context.

```python
from mcp_agent.app import MCPApp

app = MCPApp(name="finder_app")


async def main():
    async with app.run() as running_app:
        logger = running_app.logger
        logger.info("App ready", data={"servers": list(running_app.context.server_registry.registry)})
```

Docs: MCPApp • Example: examples/basic/mcp_basic_agent.

Agents couple instructions with the MCP servers (and optional functions) they may call. AgentSpec definitions can be loaded from disk and turned into agents or Augmented LLMs with the factory helpers.

```python
from pathlib import Path

from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.factory import load_agent_specs_from_file

agent = Agent(
    name="researcher",
    instruction="Research topics using web and filesystem access",
    server_names=["fetch", "filesystem"],
)


async def main():
    # `app` is the MCPApp defined in the previous snippet
    async with app.run() as running_app:
        # Connects to the agent's MCP servers for the duration of the block
        async with agent:
            tools = await agent.list_tools()

        # Load AgentSpec definitions from YAML
        specs = load_agent_specs_from_file(
            str(Path("examples/basic/agent_factory/agents.yaml")),
            context=running_app.context,
        )
```

Docs: Agents • Agent factory helpers • Examples: examples/basic/agent_factory.

Augmented LLMs wrap provider SDKs with the agent’s tools, memory, and structured output helpers. Attach one to an agent to unlock generate, generate_str, and generate_structured.

```python
from pydantic import BaseModel

from mcp_agent.workflows.llm.augmented_llm import RequestParams
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM


class Summary(BaseModel):
    title: str
    verdict: str


# Inside `async with app.run():`, with `agent` defined as above
async with agent:
    llm = await agent.attach_llm(OpenAIAugmentedLLM)

    report = await llm.generate_str(
        message="Draft a 3-sentence release note from CHANGELOG.md",
        request_params=RequestParams(maxTokens=400, temperature=0.2),
    )

    structured = await llm.generate_structured(
        message="Return a JSON object with `title` and `verdict` summarising the README.",
        response_model=Summary,
    )
```

Docs: Augmented LLMs • Examples: examples/basic/mcp_basic_agent and the workflow projects listed in gallery.md.
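`generate_structured` returns an instance of the `response_model` you pass instead of free text. The snippet above passes a pydantic model. To show what that validation step buys you, here is a standard-library-only sketch that parses a JSON reply into the same `Summary` shape. `parse_structured` is hypothetical and assumes the model replied with a bare JSON object of string fields:

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Summary:
    title: str
    verdict: str


def parse_structured(raw: str, model=Summary):
    """Parse a JSON reply and check it has exactly the model's fields, all strings."""
    data = json.loads(raw)
    expected = {f.name for f in fields(model)}
    if set(data) != expected:
        raise ValueError(f"expected keys {sorted(expected)}, got {sorted(data)}")
    for name in expected:
        if not isinstance(data[name], str):
            raise TypeError(f"{name} must be a string")
    return model(**data)


reply = '{"title": "mcp-agent", "verdict": "Concise and practical"}'
summary = parse_structured(reply)
print(summary.verdict)  # Concise and practical
```

A malformed reply (missing key, wrong type) raises instead of silently flowing downstream, which is the main reason to prefer structured output over parsing `generate_str` text by hand.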

MCPApp decorators convert coroutines into durable workflows and tools. The same annotations work for both asyncio and Temporal execution.

```python
from datetime import timedelta

from mcp_agent.executor.workflow import Workflow, WorkflowResult


@app.workflow
class PublishArticle(Workflow[WorkflowResult[str]]):
    @app.workflow_task(schedule_to_close_timeout=timedelta(minutes=5))
    async def draft(self, topic: str) -> str:
        return f"- intro to {topic}\n- highlights\n- next steps"

    @app.workflow_run
    async def run(self, topic: str) -> WorkflowResult[str]:
        outline = await self.draft(topic)
        return WorkflowResult(value=outline)
```

Docs: Decorator reference • Examples: examples/workflows.

Settings load from mcp_agent.config.yaml, mcp_agent.secrets.yaml, environment variables, and optional preload strings. Keep secrets out of source control.

```yaml
# mcp_agent.config.yaml
execution_engine: asyncio
mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
    filesystem:
      command: "npx"
      args: ["-y", "@modelcontextprotocol/server-filesystem"]
openai:
  default_model: gpt-4o-mini
```

```yaml
# mcp_agent.secrets.yaml (gitignored)
openai:
  api_key: "${OPENAI_API_KEY}"
```

Docs: Configuration reference • Specify secrets.
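The `${OPENAI_API_KEY}` value in the secrets file is a placeholder filled from the environment, which keeps the real key out of source control. The sketch below shows one way such substitution can work. `expand_env_placeholders` is a hypothetical helper, not mcp-agent's loader, and the exact rules mcp-agent applies (defaults, escaping, precedence) are not covered here:

```python
import os
import re

# Matches ${VAR_NAME} placeholders (syntax assumed from the secrets example)
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def expand_env_placeholders(value, env=None):
    """Recursively replace ${VAR} placeholders in strings, lists and dicts.

    Raises KeyError if a referenced variable is not set, so a missing
    API key fails loudly instead of being sent as a literal "${...}".
    """
    env = os.environ if env is None else env
    if isinstance(value, str):
        return _PLACEHOLDER.sub(lambda m: env[m.group(1)], value)
    if isinstance(value, list):
        return [expand_env_placeholders(v, env) for v in value]
    if isinstance(value, dict):
        return {k: expand_env_placeholders(v, env) for k, v in value.items()}
    return value  # numbers, booleans, None pass through unchanged


secrets = {"openai": {"api_key": "${OPENAI_API_KEY}"}}
resolved = expand_env_placeholders(secrets, env={"OPENAI_API_KEY": "sk-test"})
print(resolved)  # {'openai': {'api_key': 'sk-test'}}
```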

Connect to existing MCP servers programmatically or aggregate several into one façade.

```python
from mcp_agent.mcp.gen_client import gen_client

# Inside an async function
async with app.run():
    async with gen_client("filesystem", app.server_registry, context=app.context) as client:
        resources = await client.list_resources()
        app.logger.info("Filesystem resources", data={"uris": [r.uri for r in resources.resources]})
```

Docs: MCP integration overview • Examples: examples/mcp.

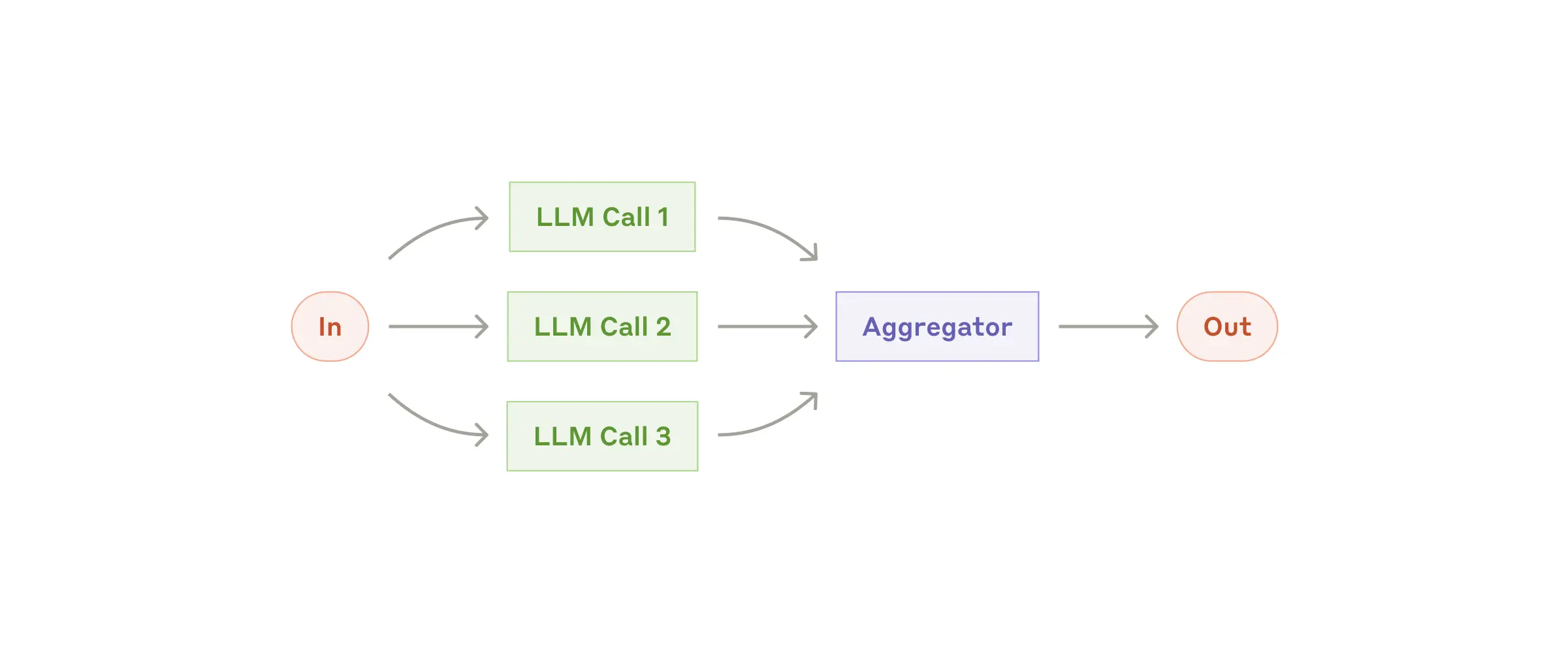

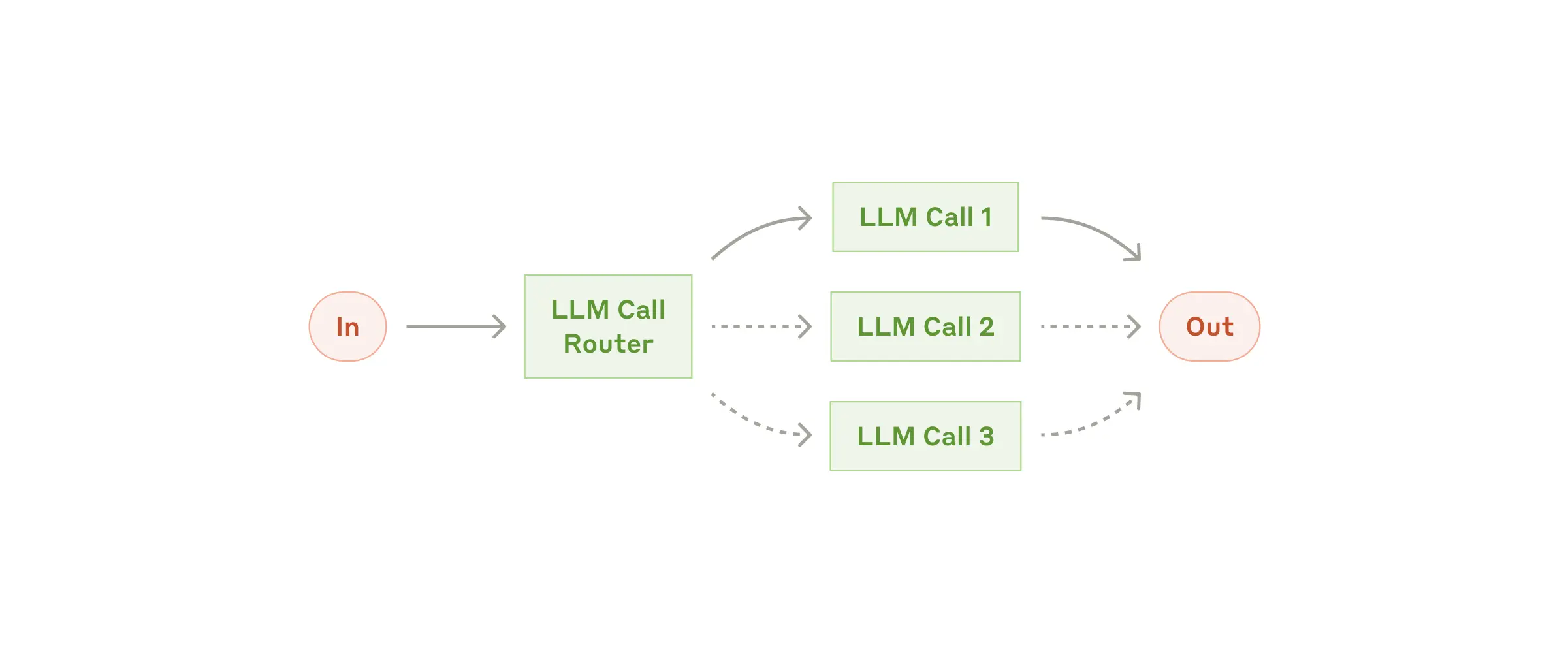

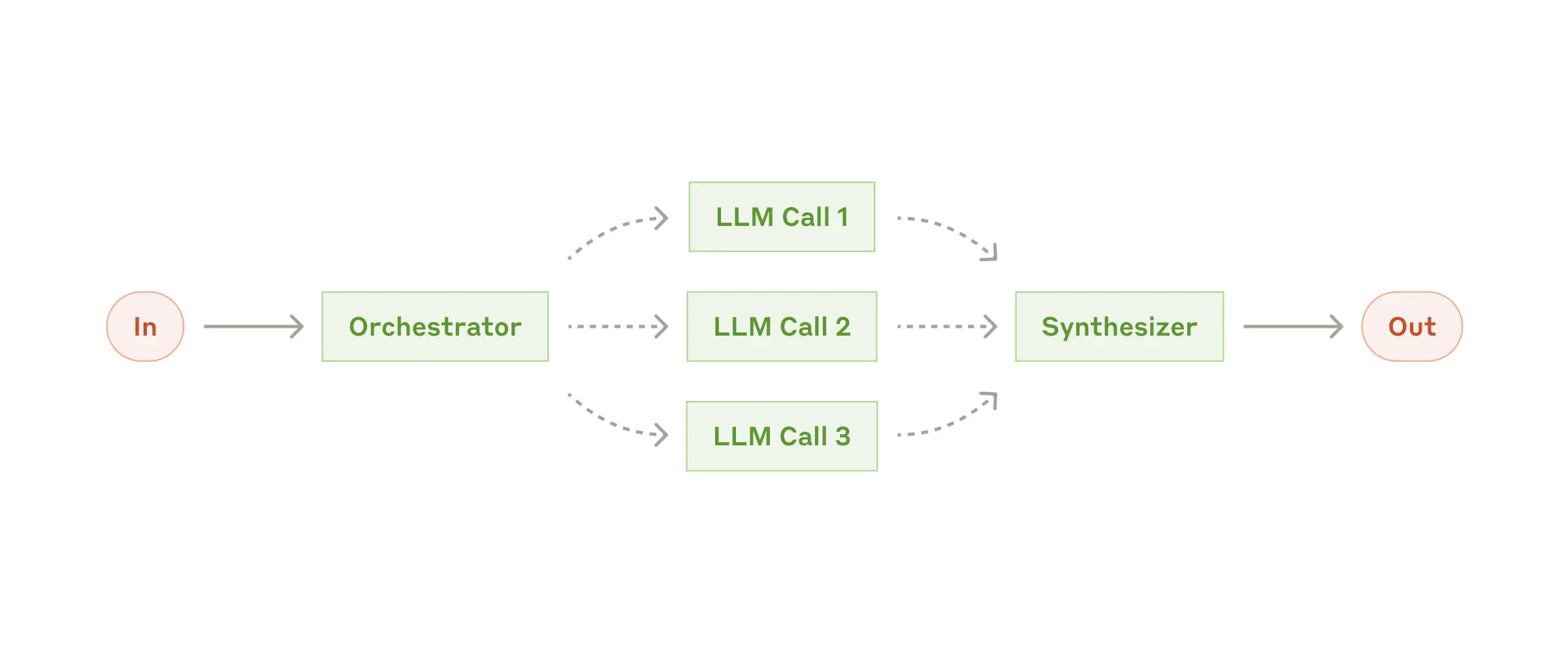

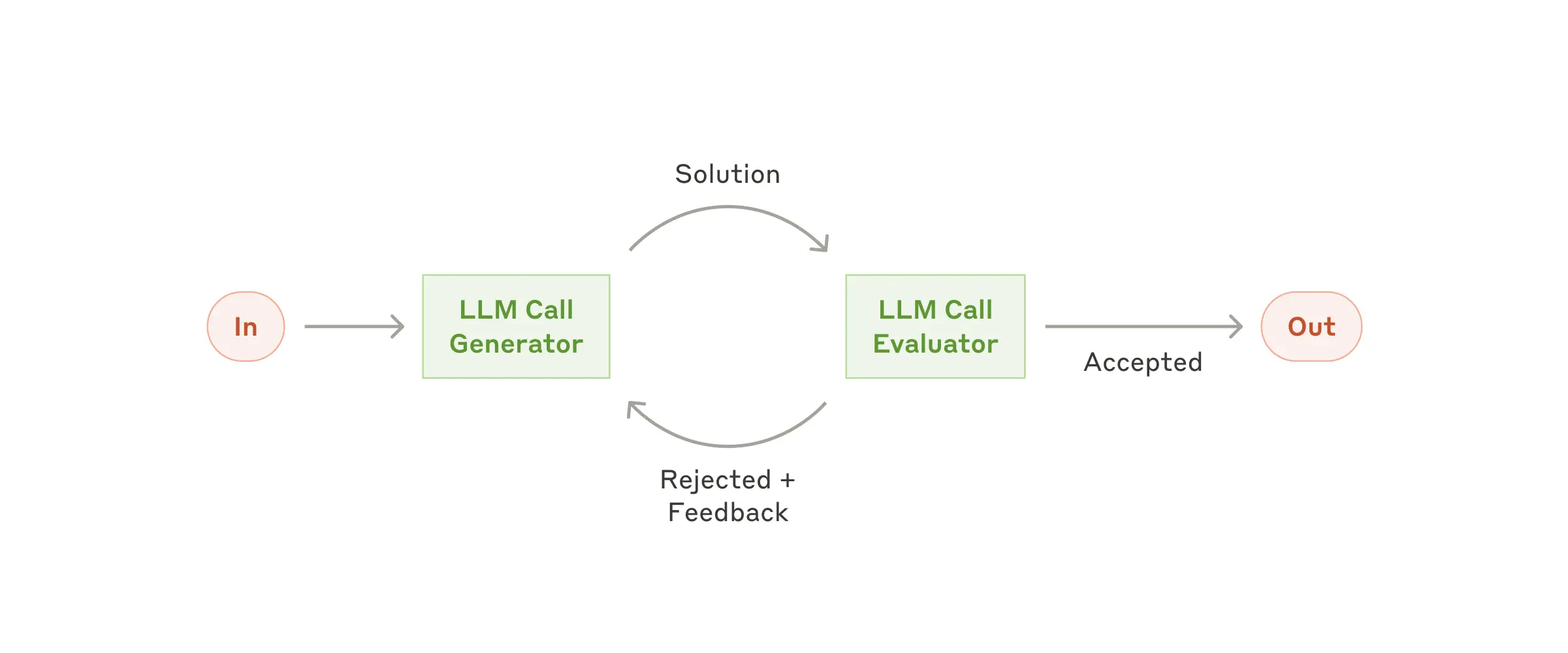

Each key agent pattern is implemented as an AugmentedLLM. Use factory helpers to wire them up or inspect the runnable projects listed in gallery.md.

| Pattern | Helper | Summary | Docs |
|---|---|---|---|
| Parallel (Map-Reduce) | `create_parallel_llm(...)` | Fan-out specialists and fan-in aggregated reports. | Parallel |
| Router | `create_router_llm(...)` / `create_router_embedding(...)` | Route requests to the best agent, server, or function. | Router |
| Intent classifier | `create_intent_classifier_llm(...)` / `create_intent_classifier_embedding(...)` | Bucket user input into intents before automation. | Intent classifier |
| Orchestrator-workers | `create_orchestrator(...)` | Generate plans and coordinate worker agents. | Planner |
| Deep research | `create_deep_orchestrator(...)` | Long-horizon research with knowledge extraction and policy checks. | Deep research |
| Evaluator-optimizer | `create_evaluator_optimizer_llm(...)` | Iterate until an evaluator approves the result. | Evaluator-optimizer |
| Swarm | `create_swarm(...)` | Multi-agent handoffs compatible with OpenAI Swarm. | Swarm |
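The Parallel pattern is a fan-out/fan-in: several specialists work on the same input concurrently, and an aggregator combines their output. Here is a minimal plain-`asyncio` sketch of that control flow, with hypothetical coroutines standing in for agent-backed LLM calls. It is not `create_parallel_llm`'s implementation:

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical stand-in type: in mcp-agent each specialist would be an agent-backed LLM
Specialist = Callable[[str], Awaitable[str]]


async def fan_out_fan_in(
    task: str,
    specialists: dict[str, Specialist],
    aggregate: Callable[[dict[str, str]], str],
) -> str:
    names = list(specialists)
    # Fan out: run every specialist on the same task concurrently
    results = await asyncio.gather(*(specialists[n](task) for n in names))
    # Fan in: hand the labelled results (gather preserves order) to one aggregator
    return aggregate(dict(zip(names, results)))


async def proofreader(text: str) -> str:
    await asyncio.sleep(0)  # simulate I/O
    return f"no typos in {text!r}"


async def fact_checker(text: str) -> str:
    await asyncio.sleep(0)
    return f"claims in {text!r} look plausible"


def join_reports(reports: dict[str, str]) -> str:
    return "\n".join(f"{name}: {report}" for name, report in reports.items())


report = asyncio.run(
    fan_out_fan_in("story.md", {"proofreader": proofreader, "fact_checker": fact_checker}, join_reports)
)
print(report)
```

In the real pattern the aggregator is usually an LLM too, but the shape is the same: independent calls run concurrently, then a single step merges them.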

Switch execution_engine to temporal for pause/resume, retries, human input, and durable history—without changing workflow code. Run a worker alongside your app to host activities.

```python
from mcp_agent.executor.temporal import create_temporal_worker_for_app

# Inside an async function (e.g. a worker script run alongside your app)
async with create_temporal_worker_for_app(app) as worker:
    await worker.run()
```

Docs: Durable agents • Temporal backend • Examples: examples/temporal.

Expose an MCPApp as a standard MCP server so Claude Desktop, Cursor, or custom clients can call your tools and workflows.

```python
from mcp_agent.server import create_mcp_server_for_app


@app.tool
def grade_story(story: str) -> str:
    return "Report..."


if __name__ == "__main__":
    server = create_mcp_server_for_app(app)
    server.run_stdio()
```

Docs: Agent servers • Examples: examples/mcp_agent_server.
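Conceptually, `@app.tool` records a function so that the server can advertise it and dispatch incoming calls to it by name. The toy registry below illustrates that decorator pattern in plain Python. `ToyToolRegistry` is hypothetical and omits everything a real MCP server adds, such as input schemas, transports, and async execution:

```python
from typing import Callable


class ToyToolRegistry:
    """Hypothetical, minimal stand-in for decorator-based tool registration."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., object]] = {}

    def tool(self, fn: Callable[..., object]) -> Callable[..., object]:
        # Register under the function's name and return it unchanged
        self.tools[fn.__name__] = fn
        return fn

    def call(self, name: str, **arguments: object) -> object:
        # Roughly what happens on a client tool call: look up by name, pass arguments
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        return self.tools[name](**arguments)


registry = ToyToolRegistry()


@registry.tool
def grade_story(story: str) -> str:
    return f"Report: {len(story.split())} words"


print(registry.call("grade_story", story="Once upon a time"))  # Report: 4 words
```

Because the decorator returns the function unchanged, `grade_story` stays directly callable in tests while also being reachable by name.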

uvx mcp-agent scaffolds projects, manages secrets, inspects workflows, and deploys to Cloud.

```bash
uvx mcp-agent init --template basic # Scaffold a new project
uvx mcp-agent deploy my-agent # Deploy to mcp-agent Cloud
```

Docs: CLI reference • Getting started guides.

Load API keys from secrets files or use the built-in OAuth client to fetch and persist tokens for MCP servers.

```yaml
# mcp_agent.config.yaml excerpt
oauth:
  providers:
    github:
      client_id: "${GITHUB_CLIENT_ID}"
      client_secret: "${GITHUB_CLIENT_SECRET}"
      scopes: ["repo", "user"]
```

Docs: Advanced authentication • Server authentication • Examples: examples/basic/oauth_basic_agent.

Enable structured logging and OpenTelemetry via configuration, and track token usage programmatically.

```yaml
# mcp_agent.config.yaml
logger:
  transports: [console]
  level: info
otel:
  enabled: true
  exporters:
    - console
```

TokenCounter tracks token usage for agents, workflows, and LLM nodes. Attach watchers to stream updates or trigger alerts.

```python
# Inside `async with app.run() as running_app:`
# token_counter lives on the running app context when tracing is enabled.
token_counter = running_app.context.token_counter


class TokenMonitor:
    async def on_token_update(self, node, usage):
        print(f"[{node.name}] total={usage.total_tokens}")


monitor = TokenMonitor()
watch_id = await token_counter.watch(
    callback=monitor.on_token_update,
    node_type="llm",
    threshold=1_000,
    include_subtree=True,
)

await token_counter.unwatch(watch_id)
```

Docs: Observability • Examples: examples/tracing.
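To make the `threshold` and `unwatch` parameters concrete, here is a self-contained toy model of a watcher registry. It assumes that a watcher fires on each update once a node's cumulative usage reaches its threshold. That rule, and `ToyTokenCounter` itself, are illustrative assumptions rather than mcp-agent's documented behaviour:

```python
import asyncio
import itertools
from typing import Awaitable, Callable

Callback = Callable[[str, int], Awaitable[None]]


class ToyTokenCounter:
    """Hypothetical model of threshold watchers; not mcp-agent's TokenCounter."""

    def __init__(self) -> None:
        self.totals: dict[str, int] = {}
        self._watches: dict[int, tuple[Callback, int]] = {}
        self._ids = itertools.count(1)

    def watch(self, callback: Callback, threshold: int) -> int:
        watch_id = next(self._ids)
        self._watches[watch_id] = (callback, threshold)
        return watch_id

    def unwatch(self, watch_id: int) -> None:
        self._watches.pop(watch_id, None)

    async def record(self, node: str, tokens: int) -> None:
        total = self.totals[node] = self.totals.get(node, 0) + tokens
        # Notify each watcher whose threshold has been reached
        for callback, threshold in list(self._watches.values()):
            if total >= threshold:
                await callback(node, total)


alerts: list[str] = []


async def on_update(node: str, total: int) -> None:
    alerts.append(f"[{node}] total={total}")


async def demo() -> None:
    counter = ToyTokenCounter()
    watch_id = counter.watch(on_update, threshold=1_000)
    await counter.record("llm", 600)  # cumulative 600: below threshold, no alert
    await counter.record("llm", 500)  # cumulative 1100: alert
    counter.unwatch(watch_id)
    await counter.record("llm", 500)  # watcher removed: no alert


asyncio.run(demo())
print(alerts)  # ['[llm] total=1100']
```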

Mix and match AgentSpecs to build higher-level workflows using the factory helpers—routers, parallel pipelines, orchestrators, and more.

```python
from mcp_agent.workflows.factory import create_router_llm

# Inside an async function; specs are loaded via load_agent_specs_from_file as shown above.
async with app.run() as running_app:
    router = await create_router_llm(
        agents=specs,
        provider="openai",
        context=running_app.context,
    )
```

Docs: Workflow composition • Examples: examples/basic/agent_factory.
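A router scores candidate agents against a request and dispatches to the best match. As their names suggest, `create_router_llm` has an LLM make that choice and `create_router_embedding` relies on embeddings. The sketch below swaps in naive word overlap so that it runs without any model. `route` and the agent descriptions are hypothetical:

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def route(request: str, agents: dict[str, str]) -> str:
    """Return the agent whose description shares the most words with the request."""
    words = tokenize(request)
    scores = {name: len(words & tokenize(desc)) for name, desc in agents.items()}
    # max() keeps the first agent on ties, so dict order acts as a tie-breaker
    return max(scores, key=scores.get)


agents = {
    "finder": "read local files or fetch urls",
    "writer": "draft tweets and release notes",
}
print(route("Fetch the URL and read it", agents))  # finder
print(route("Draft release notes", agents))  # writer
```

Word overlap breaks down quickly (it misses synonyms and treats "url" and "urls" as different words), which is exactly why the real helpers delegate scoring to an LLM or embeddings.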

Pause workflows for approvals or extra data. Temporal stores state durably until an operator resumes the run.

```python
from mcp_agent.human_input.types import HumanInputRequest

# Inside a workflow method, where `self.context` is the workflow's context
response = await self.context.request_human_input(
    HumanInputRequest(
        prompt="Approve the draft?",
        required=True,
        metadata={"workflow_id": self.context.workflow_id},
    )
)
```

Resume with `mcp-agent cloud workflows resume … --payload '{"content": "approve"}'`. Docs: Deploy agents – human input • Examples: examples/human_input/temporal.
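The key property is that the run blocks at the request and continues only when a response arrives. Below is a single-process `asyncio` sketch of that pause/resume shape, with a hypothetical `publish_workflow` and a future standing in for the operator's reply. Unlike the Temporal backend, nothing here survives a process restart:

```python
import asyncio


async def publish_workflow(approval: asyncio.Future) -> str:
    draft = "Release notes v1"
    # Pause here until someone answers the approval request
    decision = await approval
    return f"published {draft!r}" if decision == "approve" else "sent back for edits"


async def main() -> str:
    loop = asyncio.get_running_loop()
    approval: asyncio.Future = loop.create_future()
    run = asyncio.create_task(publish_workflow(approval))
    await asyncio.sleep(0)  # let the workflow start and block on the approval
    assert not run.done()  # still paused, waiting for input
    approval.set_result("approve")  # an operator "resumes" the run
    return await run


result = asyncio.run(main())
print(result)  # published 'Release notes v1'
```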

Build Settings objects programmatically when you need dynamic config (tests, multi-tenant hosts) instead of YAML files.

```python
from mcp_agent.app import MCPApp
from mcp_agent.config import Settings, MCPSettings, MCPServerSettings

settings = Settings(
    execution_engine="asyncio",
    mcp=MCPSettings(
        servers={
            "fetch": MCPServerSettings(command="uvx", args=["mcp-server-fetch"]),
        }
    ),
)

app = MCPApp(name="configured_app", settings=settings)
```

Docs: Configuring your application.

Add icons to agents and tools so MCP clients that support imagery (Claude Desktop, Cursor) render richer UIs.

```python
from base64 import standard_b64encode
from pathlib import Path

from mcp_agent.app import MCPApp
from mcp_agent.icons import Icon

# Embed the PNG as a base64 data URI
icon_data = standard_b64encode(Path("my-icon.png").read_bytes()).decode()
icon = Icon(src=f"data:image/png;base64,{icon_data}", mimeType="image/png", sizes=["64x64"])

app = MCPApp(name="my_app_with_icon", icons=[icon])


@app.tool(icons=[icon])
async def my_tool() -> str:
    return "Hello with style"
```

Docs: MCPApp icons • Examples: examples/mcp_agent_server/asyncio.

Use MCPAggregator or gen_client to manage MCP server connections and expose combined tool sets.

```python
from mcp_agent.mcp.mcp_aggregator import MCPAggregator

# Inside an async function running under `app.run()`
async with MCPAggregator.create(server_names=["fetch", "filesystem"]) as aggregator:
    tools = await aggregator.list_tools()
```

Docs: Connecting to MCP servers • Examples: examples/basic/mcp_server_aggregator.
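Combining tool sets raises a practical question: two servers may expose tools with the same name. A common approach, sketched below, is to prefix each tool with its server name and keep a reverse map for dispatch. `aggregate_tools` and the `<server>_<tool>` naming scheme are assumptions for illustration. Check the MCP integration docs for the exact names MCPAggregator exposes:

```python
def aggregate_tools(servers: dict[str, list[str]], sep: str = "_") -> dict[str, tuple[str, str]]:
    """Merge per-server tool lists into one namespace.

    Each tool is exposed as "<server><sep><tool>" and mapped back to
    (server, tool) so a call can be forwarded to the right server.
    """
    combined: dict[str, tuple[str, str]] = {}
    for server, tools in servers.items():
        for tool in tools:
            qualified = f"{server}{sep}{tool}"
            if qualified in combined:
                raise ValueError(f"duplicate tool name: {qualified}")
            combined[qualified] = (server, tool)
    return combined


tools = aggregate_tools({"fetch": ["fetch"], "filesystem": ["read_file", "list_directory"]})
print(sorted(tools))  # ['fetch_fetch', 'filesystem_list_directory', 'filesystem_read_file']
print(tools["filesystem_read_file"])  # ('filesystem', 'read_file')
```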

Deploy to mcp-agent Cloud for managed Temporal execution, secrets, and HTTPS MCP endpoints.

```bash
uvx mcp-agent login
uvx mcp-agent deploy my-agent
uvx mcp-agent cloud apps list
```

Docs: Cloud overview • Deployment quickstart • Examples: examples/cloud.

Browse gallery.md for runnable examples, demo videos, and community projects grouped by concept. Every entry cites the docs page and command you need to run it locally.

mcp-agent provides a streamlined approach to building AI agents using capabilities exposed by MCP (Model Context Protocol) servers.

MCP is quite low-level, and this framework handles the mechanics of connecting to servers, working with LLMs, handling external signals (like human input) and supporting persistent state via durable execution. That lets you, the developer, focus on the core business logic of your AI application.

Core benefits:

- 🤝 Interoperability: ensures that any tool exposed by any number of MCP servers can seamlessly plug in to your agents.

- ⛓️ Composability & Customizability: Implements well-defined workflows, but in a composable way that enables compound workflows, and allows full customization across model provider, logging, orchestrator, etc.

- 💻 Programmatic control flow: Keeps things simple as developers just write code instead of thinking in graphs, nodes and edges. For branching logic, you write `if` statements. For cycles, use `while` loops.

- 🖐️ Human Input & Signals: Supports pausing workflows for external signals, such as human input, which are exposed as tool calls an Agent can make.
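As an illustration of that style, here is the evaluator-optimizer idea written as ordinary Python control flow. `generate` and `evaluate` are hypothetical stand-ins for LLM calls, and `max_rounds` is an assumed safety cap. This is not `create_evaluator_optimizer_llm`'s implementation:

```python
from typing import Callable


def refine_until_approved(
    generate: Callable[[str, str], str],
    evaluate: Callable[[str], tuple[bool, str]],
    task: str,
    max_rounds: int = 3,
) -> tuple[str, int]:
    feedback = ""
    draft = ""
    rounds = 0
    # The cycle is just a while loop; the approval branch is just an if
    while rounds < max_rounds:
        rounds += 1
        draft = generate(task, feedback)
        approved, feedback = evaluate(draft)
        if approved:
            break
    return draft, rounds


def generate(task: str, feedback: str) -> str:
    # Stand-in for an LLM call: shortens the text when the feedback asks for it
    return task[:20] if "shorter" in feedback else task


def evaluate(draft: str) -> tuple[bool, str]:
    # Stand-in evaluator: approves drafts of at most 20 characters
    return len(draft) <= 20, "make it shorter"


outcome = refine_until_approved(generate, evaluate, "mcp-agent ships composable agent patterns")
print(outcome)  # ('mcp-agent ships comp', 2)
```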

You don't need an MCP client to use mcp-agent: it works anywhere, since it handles MCPClient creation for you. This allows you to leverage MCP servers outside of MCP hosts like Claude Desktop.

Here are all the ways you can set up your mcp-agent application:

You can expose mcp-agent applications as MCP servers themselves (see example), allowing MCP clients to interface with sophisticated AI workflows using the standard tools API of MCP servers. This is effectively a server-of-servers.

You can embed mcp-agent in an MCP client directly to manage the orchestration across multiple MCP servers.

You can use mcp-agent applications in a standalone fashion (i.e. they aren't part of an MCP client). The examples are all standalone applications.

Run `uvx mcp-agent deploy <app-name>` after logging in with `uvx mcp-agent login`. The CLI packages your project, provisions secrets, and exposes an MCP endpoint backed by a durable Temporal runtime. See the [Cloud quickstart](https://docs.mcp-agent.com/get-started/cloud) for step-by-step screenshots and CLI output.

Every class, decorator, and CLI command is documented on docs.mcp-agent.com. The API reference and the llms-full.txt are designed so LLMs (or you) can ingest the whole surface area easily.

I debated naming this project silsila (سلسلہ), which means chain of events in Urdu. mcp-agent is more matter-of-fact, but there's still an easter egg in the project paying homage to silsila.

We welcome contributions of every size—bug fixes, new examples, docs, or feature requests. Start with CONTRIBUTING.md, open a discussion, or drop by Discord.

mcp-agent would not be possible without the tireless efforts of the many open source contributors. Thank you!