diff --git a/.github/workflows/build.yaml b/.github/workflows/build.yaml

index 1227b03dc..83436efc0 100644

--- a/.github/workflows/build.yaml

+++ b/.github/workflows/build.yaml

@@ -21,9 +21,9 @@ name: build

on:

# Triggers the workflow on push or pull request events but only for the master branch

push:

- branches: [ master ]

+ branches: [ "master" ]

pull_request:

- branches: [ master ]

+ branches: [ "master" ]

# Allows you to run this workflow manually from the Actions tab

workflow_dispatch:

@@ -33,59 +33,57 @@ jobs:

# This workflow contains a single job called "build"

build:

# The type of runner that the job will run on

- runs-on: ubuntu-latest

+ runs-on: ubuntu-22.04

strategy:

matrix:

- python-version: [3.7]

- # tf-nightly has some pip version conflicts, so can't be installed.

- # Use only numbered TF as of now.

- # tf-version: ["2.4.*", "tf-nightly"]

- tf-version: ["2.4.*"]

+ python-version: [ '3.10' ]

+ # Which tf-version run.

+ tf-version: [ '2.17.0' ]

# Which set of tests to run.

- trax-test: ["lib", "research"]

+ trax-test: [ 'lib','research' ]

# Steps represent a sequence of tasks that will be executed as part of the job

steps:

- # Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it

- - uses: actions/checkout@v2

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v2

- with:

- python-version: ${{ matrix.python-version }}

- - name: Install dependencies

- run: |

- python -m pip install --upgrade pip

- python -m pip install -q -U setuptools numpy

- python -m pip install flake8 pytest

- if [[ ${{matrix.tf-version}} == "tf-nightly" ]]; then python -m pip install tf-nightly; else python -m pip install -q "tensorflow=="${{matrix.tf-version}}; fi

- pip install -e .[tests,t5]

- # # Lint with flake8

- # - name: Lint with flake8

- # run: |

- # # stop the build if there are Python syntax errors or undefined names

- # flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

- # # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

- # flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

- # Test out right now with only testing one directory.

- - name: Test with pytest

- run: |

- TRAX_TEST=" ${{matrix.trax-test}}" ./oss_scripts/oss_tests.sh

- # The below step just reports the success or failure of tests as a "commit status".

- # This is needed for copybara integration.

- - name: Report success or failure as github status

- if: always()

- shell: bash

- run: |

- status="${{ job.status }}"

- lowercase_status=$(echo $status | tr '[:upper:]' '[:lower:]')

- curl -sS --request POST \

- --url https://api.github.com/repos/${{ github.repository }}/statuses/${{ github.sha }} \

- --header 'authorization: Bearer ${{ secrets.GITHUB_TOKEN }}' \

- --header 'content-type: application/json' \

- --data '{

- "state": "'$lowercase_status'",

- "target_url": "https://github.com/${{ github.repository }}/actions/runs/${{ github.run_id }}",

- "description": "'$status'",

- "context": "github-actions/build"

- }'

+ # Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it

+ - uses: actions/checkout@v3

+ - name: Set up Python ${{matrix.python-version}}

+ uses: actions/setup-python@v5

+ with:

+ python-version: ${{matrix.python-version}}

+ cache: 'pip'

+ - name: Install dependencies

+ env:

+ PIP_DISABLE_PIP_VERSION_CHECK: '1'

+ run: |

+ python -m pip install -U pip

+ # Install TensorFlow matching matrix version.

+ python -m pip install "tensorflow==${{ matrix.tf-version }}"

+ # Install package in editable mode with test and T5 extras (tests use T5 preprocessors).

+ python -m pip install -e .[tests,t5,rl]

+ # Test out right now with only testing one directory.

+ - name: Install trax package

+ run: |

+ python -m pip install -e .

+ - name: Test with pytest

+ working-directory: .

+ run: |

+ TRAX_TEST="${{matrix.trax-test}}" ./oss_scripts/oss_tests.sh

+ # The below step just reports the success or failure of tests as a "commit status".

+ # This is needed for copy bara integration.

+ - name: Report success or failure as github status

+ if: always()

+ shell: bash

+ run: |

+ status="${{ job.status }}"

+ lowercase_status=$(echo $status | tr '[:upper:]' '[:lower:]')

+ curl -sS --request POST \

+ --url https://api.github.com/repos/${{github.repository}}/statuses/${{github.sha}} \

+ --header 'authorization: Bearer ${{ secrets.GITHUB_TOKEN }}' \

+ --header 'content-type: application/json' \

+ --data '{

+ "state": "'$lowercase_status'",

+ "target_url": "https://github.com/${{github.repository}}/actions/runs/${{github.run_id}}",

+ "description": "'$status'",

+ "context": "github-actions/build"

+ }'

diff --git a/.github/workflows/codeql.yml b/.github/workflows/codeql.yml

new file mode 100644

index 000000000..5bc0ab766

--- /dev/null

+++ b/.github/workflows/codeql.yml

@@ -0,0 +1,82 @@

+# For most projects, this workflow file will not need changing; you simply need

+# to commit it to your repository.

+#

+# You may wish to alter this file to override the set of languages analyzed,

+# or to provide custom queries or build logic.

+#

+# ******** NOTE ********

+# We have attempted to detect the languages in your repository. Please check

+# the `language` matrix defined below to confirm you have the correct set of

+# supported CodeQL languages.

+#

+name: "CodeQL"

+

+on:

+ push:

+ branches: [ "1.5.1" ]

+ pull_request:

+ # The branches below must be a subset of the branches above

+ branches: [ "1.5.1" ]

+ schedule:

+ - cron: '31 4 * * 1'

+

+jobs:

+ analyze:

+ name: Analyze

+ # Runner size impacts CodeQL analysis time. To learn more, please see:

+ # - https://gh.io/recommended-hardware-resources-for-running-codeql

+ # - https://gh.io/supported-runners-and-hardware-resources

+ # - https://gh.io/using-larger-runners

+ # Consider using larger runners for possible analysis time improvements.

+ runs-on: ${{ (matrix.language == 'swift' && 'macos-latest') || 'ubuntu-latest' }}

+ timeout-minutes: ${{ (matrix.language == 'swift' && 120) || 360 }}

+ permissions:

+ actions: read

+ contents: read

+ security-events: write

+

+ strategy:

+ fail-fast: false

+ matrix:

+ language: [ 'python' ]

+ # CodeQL supports [ 'c-cpp', 'csharp', 'go', 'java-kotlin', 'javascript-typescript', 'python', 'ruby', 'swift' ]

+ # Use only 'java-kotlin' to analyze code written in Java, Kotlin or both

+ # Use only 'javascript-typescript' to analyze code written in JavaScript, TypeScript or both

+ # Learn more about CodeQL language support at https://aka.ms/codeql-docs/language-support

+

+ steps:

+ - name: Checkout repository

+ uses: actions/checkout@v3

+

+ # Initializes the CodeQL tools for scanning.

+ - name: Initialize CodeQL

+ uses: github/codeql-action/init@v2

+ with:

+ languages: ${{ matrix.language }}

+ # If you wish to specify custom queries, you can do so here or in a config file.

+ # By default, queries listed here will override any specified in a config file.

+ # Prefix the list here with "+" to use these queries and those in the config file.

+

+ # For more details on CodeQL's query packs, refer to: https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/configuring-code-scanning#using-queries-in-ql-packs

+ # queries: security-extended,security-and-quality

+

+

+ # Autobuild attempts to build any compiled languages (C/C++, C#, Go, Java, or Swift).

+ # If this step fails, then you should remove it and run the build manually (see below)

+ - name: Autobuild

+ uses: github/codeql-action/autobuild@v2

+

+ # ℹ️ Command-line programs to run using the OS shell.

+ # 📚 See https://docs.github.com/en/actions/using-workflows/workflow-syntax-for-github-actions#jobsjob_idstepsrun

+

+ # If the Autobuild fails above, remove it and uncomment the following three lines.

+ # modify them (or add more) to build your code if your project, please refer to the EXAMPLE below for guidance.

+

+ # - run: |

+ # echo "Run, Build Application using script"

+ # ./location_of_script_within_repo/buildscript.sh

+

+ - name: Perform CodeQL Analysis

+ uses: github/codeql-action/analyze@v2

+ with:

+ category: "/language:${{matrix.language}}"

diff --git a/.readthedocs.yaml b/.readthedocs.yaml

index d9de2c1be..f6b5ff3c5 100644

--- a/.readthedocs.yaml

+++ b/.readthedocs.yaml

@@ -28,6 +28,6 @@ formats: all

# Optionally set the version of Python and requirements required to build your docs

python:

- version: 3.7

+ version: 3.10

install:

- requirements: docs/requirements.txt

diff --git a/.travis.yml b/.travis.yml

index 0251cb069..50cfa6499 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -5,10 +5,10 @@ git:

depth: 3

quiet: true

python:

- - "3.6"

+ - "3.10"

env:

global:

- - TF_VERSION="2.4.*"

+ - TF_VERSION="2.11.0"

matrix:

- TRAX_TEST="lib"

- TRAX_TEST="research"

diff --git a/README.md b/README.md

index 33884979a..f91c5699d 100644

--- a/README.md

+++ b/README.md

@@ -5,7 +5,7 @@

version](https://badge.fury.io/py/trax.svg)](https://badge.fury.io/py/trax)

[](https://github.com/google/trax/issues)

-

+

[](CONTRIBUTING.md)

[](https://opensource.org/licenses/Apache-2.0)

@@ -26,6 +26,15 @@ Here are a few example notebooks:-

* [**trax.data API explained**](https://github.com/google/trax/blob/master/trax/examples/trax_data_Explained.ipynb) : Explains some of the major functions in the `trax.data` API

* [**Named Entity Recognition using Reformer**](https://github.com/google/trax/blob/master/trax/examples/NER_using_Reformer.ipynb) : Uses a [Kaggle dataset](https://www.kaggle.com/abhinavwalia95/entity-annotated-corpus) for implementing Named Entity Recognition using the [Reformer](https://arxiv.org/abs/2001.04451) architecture.

* [**Deep N-Gram models**](https://github.com/google/trax/blob/master/trax/examples/Deep_N_Gram_Models.ipynb) : Implementation of deep n-gram models trained on Shakespeares works

+* **Graph neural networks**: baseline models available via

+ `trax.models.GraphConvNet`,

+ `trax.models.GraphEdgeNet` for node and edge updates, or the

+ attention-based `trax.models.GraphAttentionNet`.

+* Example Python scripts using these GNNs for MNIST and IMDB classification are

+ in

+ [`resources/examples/python/gnn_mnist/train.py`](resources/examples/python/gnn_mnist/train.py)

+ and

+ [`resources/examples/python/gnn_imdb/train.py`](resources/examples/python/gnn_imdb/train.py).

diff --git a/docs/.readthedocs.yaml b/docs/.readthedocs.yaml

index d9de2c1be..f6b5ff3c5 100644

--- a/docs/.readthedocs.yaml

+++ b/docs/.readthedocs.yaml

@@ -28,6 +28,6 @@ formats: all

# Optionally set the version of Python and requirements required to build your docs

python:

- version: 3.7

+ version: 3.10

install:

- requirements: docs/requirements.txt

diff --git a/docs/source/conf.py b/docs/source/conf.py

index 9901cffd3..d7cb356a1 100644

--- a/docs/source/conf.py

+++ b/docs/source/conf.py

@@ -15,12 +15,12 @@

# -*- coding: utf-8 -*-

#

-# Configuration file for the Sphinx documentation builder.

+# Configuration file for the Sphinx documentation loader.

#

# This file does only contain a selection of the most common options. For a

# full list see the documentation:

# http://www.sphinx-doc.org/en/master/config

-"""Configuration file for Sphinx autodoc API documentation builder."""

+"""Configuration file for Sphinx autodoc API documentation loader."""

# -- Path setup --------------------------------------------------------------

@@ -31,19 +31,20 @@

import os

import sys

-sys.path.insert(0, os.path.abspath('../..'))

+

+sys.path.insert(0, os.path.abspath("../.."))

# -- Project information -----------------------------------------------------

-project = 'Trax'

-copyright = '2020, Google LLC.' # pylint: disable=redefined-builtin

-author = 'The Trax authors'

+project = "Trax"

+copyright = "2020, Google LLC." # pylint: disable=redefined-builtin

+author = "The Trax authors"

# The short X.Y version

-version = ''

+version = ""

# The full version, including alpha/beta/rc tags

-release = ''

+release = ""

# -- General configuration ---------------------------------------------------

@@ -56,23 +57,23 @@

# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

# ones.

extensions = [

- 'nbsphinx',

- 'sphinx.ext.autodoc',

- 'sphinx.ext.mathjax',

- 'sphinx.ext.napoleon',

+ "nbsphinx",

+ "sphinx.ext.autodoc",

+ "sphinx.ext.mathjax",

+ "sphinx.ext.napoleon",

]

# Add any paths that contain templates here, relative to this directory.

-templates_path = ['_templates']

+templates_path = ["_templates"]

# The suffix(es) of source filenames.

# You can specify multiple suffix as a list of string:

#

# source_suffix = ['.rst', '.md']

-source_suffix = '.rst'

+source_suffix = ".rst"

# The master toctree document.

-master_doc = 'index'

+master_doc = "index"

# The language for content autogenerated by Sphinx. Refer to documentation

# for a list of supported languages.

@@ -95,7 +96,7 @@

# The theme to use for HTML and HTML Help pages. See the documentation for

# a list of builtin themes.

#

-html_theme = 'sphinx_rtd_theme'

+html_theme = "sphinx_rtd_theme"

# Theme options are theme-specific and customize the look and feel of a theme

# further. For a list of options available for each theme, see the

@@ -106,7 +107,7 @@

# Add any paths that contain custom static files (such as style sheets) here,

# relative to this directory. They are copied after the builtin static files,

# so a file named "default.css" will overwrite the builtin "default.css".

-html_static_path = ['_static']

+html_static_path = ["_static"]

# Custom sidebar templates, must be a dictionary that maps document names

# to template names.

@@ -121,8 +122,8 @@

# -- Options for HTMLHelp output ---------------------------------------------

-# Output file base name for HTML help builder.

-htmlhelp_basename = 'Traxdoc'

+# Output file base name for HTML help loader.

+htmlhelp_basename = "Traxdoc"

# -- Options for LaTeX output ------------------------------------------------

@@ -131,15 +132,12 @@

# The paper size ('letterpaper' or 'a4paper').

#

# 'papersize': 'letterpaper',

-

# The font size ('10pt', '11pt' or '12pt').

#

# 'pointsize': '10pt',

-

# Additional stuff for the LaTeX preamble.

#

# 'preamble': '',

-

# Latex figure (float) alignment

#

# 'figure_align': 'htbp',

@@ -149,8 +147,7 @@

# (source start file, target name, title,

# author, documentclass [howto, manual, or own class]).

latex_documents = [

- (master_doc, 'Trax.tex', 'Trax Documentation',

- 'Trax authors', 'manual'),

+ (master_doc, "Trax.tex", "Trax Documentation", "Trax authors", "manual"),

]

@@ -158,10 +155,7 @@

# One entry per manual page. List of tuples

# (source start file, name, description, authors, manual section).

-man_pages = [

- (master_doc, 'trax', 'Trax Documentation',

- [author], 1)

-]

+man_pages = [(master_doc, "trax", "Trax Documentation", [author], 1)]

# -- Options for Texinfo output ----------------------------------------------

@@ -170,9 +164,15 @@

# (source start file, target name, title, author,

# dir menu entry, description, category)

texinfo_documents = [

- (master_doc, 'Trax', 'Trax Documentation',

- author, 'Trax', 'One line description of project.',

- 'Miscellaneous'),

+ (

+ master_doc,

+ "Trax",

+ "Trax Documentation",

+ author,

+ "Trax",

+ "One line description of project.",

+ "Miscellaneous",

+ ),

]

@@ -191,37 +191,36 @@

# epub_uid = ''

# A list of files that should not be packed into the epub file.

-epub_exclude_files = ['search.html']

+epub_exclude_files = ["search.html"]

# -- Extension configuration -------------------------------------------------

-autodoc_member_order = 'bysource'

+autodoc_member_order = "bysource"

autodoc_default_options = {

- 'members': None, # Include all public members.

- 'undoc-members': True, # Include members that lack docstrings.

- 'show-inheritance': True,

- 'special-members': '__call__, __init__',

+ "members": None, # Include all public members.

+ "undoc-members": True, # Include members that lack docstrings.

+ "show-inheritance": True,

+ "special-members": "__call__, __init__",

}

autodoc_mock_imports = [

- 'gin',

- 'jax',

- 'numpy',

- 'scipy',

- 'tensorflow',

- 'tensorflow_datasets',

- 'funcsigs',

- 'trax.tf_numpy',

- 'absl',

- 'gym',

- 'tensor2tensor',

- 'tensorflow_text',

- 'matplotlib',

- 'cloudpickle',

- 't5',

- 'psutil',

+ "gin",

+ "jax",

+ "numpy",

+ "scipy",

+ "tensorflow",

+ "tensorflow_datasets",

+ "funcsigs",

+ "trax.tf",

+ "absl",

+ "gym",

+ "tensor2tensor",

+ "tensorflow_text",

+ "matplotlib",

+ "cloudpickle",

+ "t5",

+ "psutil",

# 'setup',

]

-

diff --git a/oss_scripts/oss_pip_install.sh b/oss_scripts/oss_pip_install.sh

index 839d3d9fc..652fa447d 100755

--- a/oss_scripts/oss_pip_install.sh

+++ b/oss_scripts/oss_pip_install.sh

@@ -15,7 +15,7 @@

#!/bin/bash

set -v # print commands as they're executed

-set -e # fail and exit on any command erroring

+set -e # fail and exit on any command error

: "${TF_VERSION:?}"

diff --git a/oss_scripts/oss_release.sh b/oss_scripts/oss_release.sh

index 9d913ba8f..7b2944fb9 100755

--- a/oss_scripts/oss_release.sh

+++ b/oss_scripts/oss_release.sh

@@ -15,18 +15,18 @@

#!/bin/bash

set -v # print commands as they're executed

-set -e # fail and exit on any command erroring

+set -e # fail and exit on any command error

GIT_COMMIT_ID=${1:-""}

[[ -z $GIT_COMMIT_ID ]] && echo "Must provide a commit" && exit 1

TMP_DIR=$(mktemp -d)

-pushd $TMP_DIR

+pushd "$TMP_DIR"

echo "Cloning trax and checking out commit $GIT_COMMIT_ID"

git clone https://github.com/google/trax.git

cd trax

-git checkout $GIT_COMMIT_ID

+git checkout "$GIT_COMMIT_ID"

python3 -m pip install wheel twine pyopenssl

@@ -42,4 +42,4 @@ python3 -m twine upload dist/*

# Cleanup

rm -rf build/ dist/ trax.egg-info/

popd

-rm -rf $TMP_DIR

+rm -rf "$TMP_DIR"

diff --git a/oss_scripts/oss_tests.sh b/oss_scripts/oss_tests.sh

index ee3bf428f..5db095be5 100755

--- a/oss_scripts/oss_tests.sh

+++ b/oss_scripts/oss_tests.sh

@@ -1,3 +1,5 @@

+#!/bin/bash

+

# Copyright 2022 The Trax Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

@@ -12,8 +14,6 @@

# See the License for the specific language governing permissions and

# limitations under the License.

-#!/bin/bash

-

set -v # print commands as they're executed

# aliases aren't expanded in non-interactive shells by default.

@@ -46,98 +46,53 @@ set_status

# # Run pytest with coverage.

# alias pytest='coverage run -m pytest'

-# Check tests, separate out directories for easy triage.

-

+# Check tests, check each directory of tests separately.

if [[ "${TRAX_TEST}" == "lib" ]]

then

- ## Core Trax and Supervised Learning

+ echo "Testing all framework packages..."

- # Disabled the decoding test for now, since it OOMs.

- # TODO(afrozm): Add the decoding_test.py back again.

-

- # training_test and trainer_lib_test parse flags, so can't use with --ignore

- pytest \

- --ignore=trax/supervised/callbacks_test.py \

- --ignore=trax/supervised/decoding_test.py \

- --ignore=trax/supervised/decoding_timing_test.py \

- --ignore=trax/supervised/trainer_lib_test.py \

- --ignore=trax/supervised/training_test.py \

- trax/supervised

+ ## Core Trax and Supervised Learning

+ pytest tests/data

set_status

- # Testing these separately here.

- pytest \

- trax/supervised/callbacks_test.py \

- trax/supervised/trainer_lib_test.py \

- trax/supervised/training_test.py

+ pytest tests/fastmath

set_status

- pytest trax/data

+ pytest tests/layers

set_status

- # Ignoring acceleration_test's test_chunk_grad_memory since it is taking a

- # lot of time on OSS.

- pytest \

- --deselect=trax/layers/acceleration_test.py::AccelerationTest::test_chunk_grad_memory \

- --deselect=trax/layers/acceleration_test.py::AccelerationTest::test_chunk_memory \

- --ignore=trax/layers/initializers_test.py \

- --ignore=trax/layers/test_utils.py \

- trax/layers

+ pytest tests/learning

set_status

- pytest trax/layers/initializers_test.py

+ pytest tests/models

set_status

- pytest trax/fastmath

+ pytest tests/optimizers

set_status

- pytest trax/optimizers

+ pytest tests/tf

set_status

- # Catch-all for futureproofing.

- pytest \

- --ignore=trax/trax2keras_test.py \

- --ignore=trax/data \

- --ignore=trax/fastmath \

- --ignore=trax/layers \

- --ignore=trax/models \

- --ignore=trax/optimizers \

- --ignore=trax/rl \

- --ignore=trax/supervised \

- --ignore=trax/tf_numpy

+ pytest tests/trainers

set_status

-else

- # Models, RL and misc right now.

- ## Models

- # Disabled tests are quasi integration tests.

- pytest \

- --ignore=trax/models/reformer/reformer_e2e_test.py \

- --ignore=trax/models/reformer/reformer_memory_test.py \

- --ignore=trax/models/research/terraformer_e2e_test.py \

- --ignore=trax/models/research/terraformer_memory_test.py \

- --ignore=trax/models/research/terraformer_oom_test.py \

- trax/models

+ pytest tests/utils/import_test.py

set_status

- ## RL Trax

- pytest trax/rl

+ pytest tests/utils/shapes_test.py

set_status

- ## Trax2Keras

- # TODO(afrozm): Make public again after TF 2.5 releases.

- # pytest trax/trax2keras_test.py

- # set_status

+else

+ echo "No testing ..."

+ # Models, RL and misc right now.

# Check notebooks.

# TODO(afrozm): Add more.

- jupyter nbconvert --ExecutePreprocessor.kernel_name=python3 \

- --ExecutePreprocessor.timeout=600 --to notebook --execute \

- trax/intro.ipynb;

- set_status

+ # jupyter nbconvert --ExecutePreprocessor.kernel_name=python3 \

+ # --ExecutePreprocessor.timeout=600 --to notebook --execute \

+ # trax/intro.ipynb;

+ # set_status

fi

-# TODO(traxers): Test tf-numpy separately.

-

exit $STATUS

diff --git a/pytest.ini b/pytest.ini

new file mode 100644

index 000000000..f284563f6

--- /dev/null

+++ b/pytest.ini

@@ -0,0 +1,2 @@

+[pytest]

+pythonpath = . trax

\ No newline at end of file

diff --git a/trax/data/testdata/bert_uncased_vocab.txt b/resources/data/testdata/bert_uncased_vocab.txt

similarity index 100%

rename from trax/data/testdata/bert_uncased_vocab.txt

rename to resources/data/testdata/bert_uncased_vocab.txt

diff --git a/trax/data/testdata/c4/en/2.3.0/c4-train.tfrecord-00000-of-00001 b/resources/data/testdata/c4/en/2.3.0/c4-train.tfrecord-00000-of-00001

similarity index 100%

rename from trax/data/testdata/c4/en/2.3.0/c4-train.tfrecord-00000-of-00001

rename to resources/data/testdata/c4/en/2.3.0/c4-train.tfrecord-00000-of-00001

diff --git a/trax/data/testdata/c4/en/2.3.0/c4-validation.tfrecord-00000-of-00001 b/resources/data/testdata/c4/en/2.3.0/c4-validation.tfrecord-00000-of-00001

similarity index 100%

rename from trax/data/testdata/c4/en/2.3.0/c4-validation.tfrecord-00000-of-00001

rename to resources/data/testdata/c4/en/2.3.0/c4-validation.tfrecord-00000-of-00001

diff --git a/trax/data/testdata/c4/en/2.3.0/dataset_info.json b/resources/data/testdata/c4/en/2.3.0/dataset_info.json

similarity index 100%

rename from trax/data/testdata/c4/en/2.3.0/dataset_info.json

rename to resources/data/testdata/c4/en/2.3.0/dataset_info.json

diff --git a/trax/data/testdata/corpus-1.txt b/resources/data/testdata/corpus-1.txt

similarity index 100%

rename from trax/data/testdata/corpus-1.txt

rename to resources/data/testdata/corpus-1.txt

diff --git a/trax/data/testdata/corpus-2.txt b/resources/data/testdata/corpus-2.txt

similarity index 100%

rename from trax/data/testdata/corpus-2.txt

rename to resources/data/testdata/corpus-2.txt

diff --git a/trax/data/testdata/en_8k.subword b/resources/data/testdata/en_8k.subword

similarity index 100%

rename from trax/data/testdata/en_8k.subword

rename to resources/data/testdata/en_8k.subword

diff --git a/trax/data/testdata/para_crawl/ende/1.2.0/dataset_info.json b/resources/data/testdata/para_crawl/ende/1.2.0/dataset_info.json

similarity index 100%

rename from trax/data/testdata/para_crawl/ende/1.2.0/dataset_info.json

rename to resources/data/testdata/para_crawl/ende/1.2.0/dataset_info.json

diff --git a/trax/data/testdata/para_crawl/ende/1.2.0/features.json b/resources/data/testdata/para_crawl/ende/1.2.0/features.json

similarity index 100%

rename from trax/data/testdata/para_crawl/ende/1.2.0/features.json

rename to resources/data/testdata/para_crawl/ende/1.2.0/features.json

diff --git a/trax/data/testdata/para_crawl/ende/1.2.0/para_crawl-train.tfrecord-00000-of-00001 b/resources/data/testdata/para_crawl/ende/1.2.0/para_crawl-train.tfrecord-00000-of-00001

similarity index 100%

rename from trax/data/testdata/para_crawl/ende/1.2.0/para_crawl-train.tfrecord-00000-of-00001

rename to resources/data/testdata/para_crawl/ende/1.2.0/para_crawl-train.tfrecord-00000-of-00001

diff --git a/trax/data/testdata/sentencepiece.model b/resources/data/testdata/sentencepiece.model

similarity index 100%

rename from trax/data/testdata/sentencepiece.model

rename to resources/data/testdata/sentencepiece.model

diff --git a/trax/data/testdata/squad/v1.1/3.0.0/dataset_info.json b/resources/data/testdata/squad/v1.1/3.0.0/dataset_info.json

similarity index 74%

rename from trax/data/testdata/squad/v1.1/3.0.0/dataset_info.json

rename to resources/data/testdata/squad/v1.1/3.0.0/dataset_info.json

index 3298dab0a..31c889289 100644

--- a/trax/data/testdata/squad/v1.1/3.0.0/dataset_info.json

+++ b/resources/data/testdata/squad/v1.1/3.0.0/dataset_info.json

@@ -1,51 +1,51 @@

{

- "citation": "@article{2016arXiv160605250R,\n author = {{Rajpurkar}, Pranav and {Zhang}, Jian and {Lopyrev},\n Konstantin and {Liang}, Percy},\n title = \"{SQuAD: 100,000+ Questions for Machine Comprehension of Text}\",\n journal = {arXiv e-prints},\n year = 2016,\n eid = {arXiv:1606.05250},\n pages = {arXiv:1606.05250},\narchivePrefix = {arXiv},\n eprint = {1606.05250},\n}\n",

- "description": "Stanford Question Answering Dataset (SQuAD) is a reading comprehension dataset, consisting of questions posed by crowdworkers on a set of Wikipedia articles, where the answer to every question is a segment of text, or span, from the corresponding reading passage, or the question might be unanswerable.\n",

+ "citation": "@article{2016arXiv160605250R,\n author = {{Rajpurkar}, Pranav and {Zhang}, Jian and {Lopyrev},\n Konstantin and {Liang}, Percy},\n title = \"{SQuAD: 100,000+ Questions for Machine Comprehension of Text}\",\n journal = {arXiv e-prints},\n year = 2016,\n eid = {arXiv:1606.05250},\n pages = {arXiv:1606.05250},\narchivePrefix = {arXiv},\n eprint = {1606.05250},\n}\n",

+ "description": "Stanford Question Answering Dataset (SQuAD) is a reading comprehension dataset, consisting of questions posed by crowdworkers on a set of Wikipedia articles, where the answer to every question is a segment of text, or span, from the corresponding reading passage, or the question might be unanswerable.\n",

"location": {

"urls": [

"https://rajpurkar.github.io/SQuAD-explorer/"

]

- },

- "name": "squad",

+ },

+ "name": "squad",

"schema": {

"feature": [

{

"name": "answers"

- },

+ },

{

- "name": "context",

+ "name": "context",

"type": "BYTES"

- },

+ },

{

- "name": "id",

+ "name": "id",

"type": "BYTES"

- },

+ },

{

- "name": "question",

+ "name": "question",

"type": "BYTES"

- },

+ },

{

- "name": "title",

+ "name": "title",

"type": "BYTES"

}

]

- },

- "sizeInBytes": "35142551",

+ },

+ "sizeInBytes": "35142551",

"splits": [

{

- "name": "train",

- "numShards": "1",

+ "name": "train",

+ "numShards": "1",

"shardLengths": [

"10"

]

},

{

- "name": "validation",

- "numShards": "1",

+ "name": "validation",

+ "numShards": "1",

"shardLengths": [

"10"

]

}

- ],

+ ],

"version": "3.0.0"

-}

+}

\ No newline at end of file

diff --git a/trax/data/testdata/squad/v1.1/3.0.0/squad-train.tfrecord-00000-of-00001 b/resources/data/testdata/squad/v1.1/3.0.0/squad-train.tfrecord-00000-of-00001

similarity index 100%

rename from trax/data/testdata/squad/v1.1/3.0.0/squad-train.tfrecord-00000-of-00001

rename to resources/data/testdata/squad/v1.1/3.0.0/squad-train.tfrecord-00000-of-00001

diff --git a/trax/data/testdata/squad/v1.1/3.0.0/squad-validation.tfrecord-00000-of-00001 b/resources/data/testdata/squad/v1.1/3.0.0/squad-validation.tfrecord-00000-of-00001

similarity index 100%

rename from trax/data/testdata/squad/v1.1/3.0.0/squad-validation.tfrecord-00000-of-00001

rename to resources/data/testdata/squad/v1.1/3.0.0/squad-validation.tfrecord-00000-of-00001

diff --git a/trax/data/testdata/vocab-1.txt b/resources/data/testdata/vocab-1.txt

similarity index 100%

rename from trax/data/testdata/vocab-1.txt

rename to resources/data/testdata/vocab-1.txt

diff --git a/trax/data/testdata/vocab-2.txt b/resources/data/testdata/vocab-2.txt

similarity index 100%

rename from trax/data/testdata/vocab-2.txt

rename to resources/data/testdata/vocab-2.txt

diff --git a/trax/models/reformer/testdata/vocab.translate_ende_wmt32k.32768.subwords b/resources/data/testdata/vocab.translate_ende_wmt32k.32768.subwords

similarity index 100%

rename from trax/models/reformer/testdata/vocab.translate_ende_wmt32k.32768.subwords

rename to resources/data/testdata/vocab.translate_ende_wmt32k.32768.subwords

diff --git a/resources/examples/ipynb/Example-0-Introduction.ipynb b/resources/examples/ipynb/Example-0-Introduction.ipynb

new file mode 100644

index 000000000..36a211232

--- /dev/null

+++ b/resources/examples/ipynb/Example-0-Introduction.ipynb

@@ -0,0 +1,664 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "7yuytuIllsv1"

+ },

+ "source": [

+ "# Trax Quick Intro\n",

+ "\n",

+ "[Trax](https://trax-ml.readthedocs.io/en/latest/) is an end-to-end library for deep learning that focuses on clear code and speed. It is actively used and maintained in the [Google Brain team](https://research.google.com/teams/brain/). This notebook ([run it in colab](https://colab.research.google.com/github/google/trax/blob/master/trax/intro.ipynb)) shows how to use Trax and where you can find more information.\n",

+ "\n",

+ " 1. **Run a pre-trained Transformer**: create a translator in a few lines of code\n",

+ " 1. **Features and resources**: [API docs](https://trax-ml.readthedocs.io/en/latest/trax.html), where to [talk to us](https://gitter.im/trax-ml/community), how to [open an issue](https://github.com/google/trax/issues) and more\n",

+ " 1. **Walkthrough**: how Trax works, how to make new models and train on your own data\n",

+ "\n",

+ "We welcome **contributions** to Trax! We welcome PRs with code for new models and layers as well as improvements to our code and documentation. We especially love **notebooks** that explain how models work and show how to use them to solve problems!\n",

+ "\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "BIl27504La0G"

+ },

+ "source": [

+ "**General Setup**\n",

+ "\n",

+ "Execute the following few cells (once) before running any of the code samples."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 36794,

+ "status": "ok",

+ "timestamp": 1607149386661,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "oILRLCWN_16u"

+ },

+ "outputs": [],

+ "source": [

+ "#@title\n",

+ "# Copyright 2020 Google LLC.\n",

+ "\n",

+ "# Licensed under the Apache License, Version 2.0 (the \"License\");\n",

+ "# you may not use this file except in compliance with the License.\n",

+ "# You may obtain a copy of the License at\n",

+ "\n",

+ "# https://www.apache.org/licenses/LICENSE-2.0\n",

+ "\n",

+ "# Unless required by applicable law or agreed to in writing, software\n",

+ "# distributed under the License is distributed on an \"AS IS\" BASIS,\n",

+ "# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n",

+ "# See the License for the specific language governing permissions and\n",

+ "# limitations under the License."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 463,

+ "status": "ok",

+ "timestamp": 1607149387132,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "vlGjGoGMTt-D",

+ "outputId": "3076e638-695d-4017-e757-98d929630e17"

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "import numpy as np\n",

+ "import sys"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# For example, if trax is inside a 'src' directory\n",

+ "project_root = os.environ.get('TRAX_PROJECT_ROOT', '')\n",

+ "sys.path.insert(0, project_root)\n",

+ "\n",

+ "# Option to verify the import path\n",

+ "print(f\"Python will look for packages in: {sys.path[0]}\")\n",

+ "\n",

+ "# Import trax\n",

+ "import trax\n",

+ "from trax.data.encoder import encoder\n",

+ "from trax.learning.supervised import decoding as decoding\n",

+ "from trax import models as models\n",

+ "\n",

+ "# Verify the source of the imported package\n",

+ "print(f\"Imported trax from: {trax.__file__}\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-LQ89rFFsEdk"

+ },

+ "source": [

+ "## 1. Run a pre-trained Transformer\n",

+ "\n",

+ "Here is how you create an Engligh-German translator in a few lines of code:\n",

+ "\n",

+ "* create a Transformer model in Trax with [trax.models.Transformer](https://trax-ml.readthedocs.io/en/latest/trax.models.html#trax.models.transformer.Transformer)\n",

+ "* initialize it from a file with pre-trained weights with [model.init_from_file](https://trax-ml.readthedocs.io/en/latest/trax.layers.html#trax.layers.base.Layer.init_from_file)\n",

+ "* tokenize your input sentence to input into the model with [trax.data.tokenize](https://trax-ml.readthedocs.io/en/latest/trax.data.html#trax.data.tf_inputs.tokenize)\n",

+ "* decode from the Transformer with [trax.supervised.decoding.autoregressive_sample](https://trax-ml.readthedocs.io/en/latest/trax.supervised.html#trax.supervised.decoding.autoregressive_sample)\n",

+ "* de-tokenize the decoded result to get the translation with [trax.data.detokenize](https://trax-ml.readthedocs.io/en/latest/trax.data.html#trax.data.tf_inputs.detokenize)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 46373,

+ "status": "ok",

+ "timestamp": 1607149433512,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "djTiSLcaNFGa",

+ "outputId": "a7917337-0a77-4064-8a6e-4e44e4a9c7c7"

+ },

+ "outputs": [],

+ "source": [

+ "# Create a Transformer model.\n",

+ "# Pre-trained model config in gs://trax-ml/models/translation/ende_wmt32k.gin\n",

+ "model = models.Transformer(\n",

+ " input_vocab_size=33300,\n",

+ " d_model=512, d_ff=2048,\n",

+ " n_heads=8, n_encoder_layers=6, n_decoder_layers=6,\n",

+ " max_len=2048, mode='predict')\n",

+ "\n",

+ "# Initialize using pre-trained weights.\n",

+ "model.init_from_file('gs://trax-ml/models/translation/ende_wmt32k.pkl.gz',\n",

+ " weights_only=True)\n",

+ "\n",

+ "# Tokenize a sentence.\n",

+ "sentence = 'It is nice to learn new things today!'\n",

+ "tokenized = list(encoder.tokenize(iter([sentence]), # Operates on streams.\n",

+ " vocab_dir='gs://trax-ml/vocabs/',\n",

+ " vocab_file='ende_32k.subword'))[0]\n",

+ "\n",

+ "# Decode from the Transformer.\n",

+ "tokenized = tokenized[None, :] # Add batch dimension.\n",

+ "tokenized_translation = decoding.autoregressive_sample(\n",

+ " model, tokenized, temperature=0.0) # Higher temperature: more diverse results.\n",

+ "\n",

+ "# De-tokenize,\n",

+ "tokenized_translation = tokenized_translation[0][:-1] # Remove batch and EOS.\n",

+ "translation = encoder.detokenize(tokenized_translation,\n",

+ " vocab_dir='gs://trax-ml/vocabs/',\n",

+ " vocab_file='ende_32k.subword')\n",

+ "print(translation)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "QMo3OnsGgLNK"

+ },

+ "source": [

+ "## 2. Features and resources\n",

+ "\n",

+ "Trax includes basic models (like [ResNet](https://github.com/google/trax/blob/master/trax/models/resnet.py#L70), [LSTM](https://github.com/google/trax/blob/master/trax/models/rnn.py#L100), [Transformer](https://github.com/google/trax/blob/master/trax/models/transformer.py#L189) and RL algorithms\n",

+ "(like [REINFORCE](https://github.com/google/trax/blob/master/trax/rl/training.py#L244), [A2C](https://github.com/google/trax/blob/master/trax/rl/actor_critic_joint.py#L458), [PPO](https://github.com/google/trax/blob/master/trax/rl/actor_critic_joint.py#L209)). It is also actively used for research and includes\n",

+ "new models like the [Reformer](https://github.com/google/trax/tree/master/trax/models/reformer) and new RL algorithms like [AWR](https://arxiv.org/abs/1910.00177). Trax has bindings to a large number of deep learning datasets, including\n",

+ "[Tensor2Tensor](https://github.com/tensorflow/tensor2tensor) and [TensorFlow datasets](https://www.tensorflow.org/datasets/catalog/overview).\n",

+ "\n",

+ "\n",

+ "You can use Trax either as a library from your own python scripts and notebooks\n",

+ "or as a binary from the shell, which can be more convenient for training large models.\n",

+ "It runs without any changes on CPUs, GPUs and TPUs.\n",

+ "\n",

+ "* [API docs](https://trax-ml.readthedocs.io/en/latest/)\n",

+ "* [chat with us](https://gitter.im/trax-ml/community)\n",

+ "* [open an issue](https://github.com/google/trax/issues)\n",

+ "* subscribe to [trax-discuss](https://groups.google.com/u/1/g/trax-discuss) for news\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "8wgfJyhdihfR"

+ },

+ "source": [

+ "## 3. Walkthrough\n",

+ "\n",

+ "You can learn here how Trax works, how to create new models and how to train them on your own data."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "yM12hgQnp4qo"

+ },

+ "source": [

+ "### Tensors and Fast Math\n",

+ "\n",

+ "The basic units flowing through Trax models are *tensors* - multi-dimensional arrays, sometimes also known as numpy arrays, due to the most widely used package for tensor operations -- `numpy`. You should take a look at the [numpy guide](https://numpy.org/doc/stable/user/quickstart.html) if you don't know how to operate on tensors: Trax also uses the numpy API for that.\n",

+ "\n",

+ "In Trax we want numpy operations to run very fast, making use of GPUs and TPUs to accelerate them. We also want to automatically compute gradients of functions on tensors. This is done in the `trax.fastmath` package thanks to its backends -- [JAX](https://github.com/google/jax) and [TensorFlow numpy](https://tensorflow.org)."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 667,

+ "status": "ok",

+ "timestamp": 1607149434186,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "kSauPt0NUl_o",

+ "outputId": "c7288312-767d-4344-91ae-95ebf386ce57"

+ },

+ "outputs": [],

+ "source": [

+ "from trax.fastmath import numpy as fastnp\n",

+ "\n",

+ "trax.fastmath.use_backend('jax') # Can be 'jax' or 'tensorflow-numpy'.\n",

+ "\n",

+ "matrix = fastnp.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])\n",

+ "print(f'matrix =\\n{matrix}')\n",

+ "vector = fastnp.ones(3)\n",

+ "print(f'vector = {vector}')\n",

+ "product = fastnp.dot(vector, matrix)\n",

+ "print(f'product = {product}')\n",

+ "tanh = fastnp.tanh(product)\n",

+ "print(f'tanh(product) = {tanh}')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "snLYtU6OsKU2"

+ },

+ "source": [

+ "Gradients can be calculated using `trax.fastmath.grad`."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 545,

+ "status": "ok",

+ "timestamp": 1607149434742,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "cqjYoxPEu8PG",

+ "outputId": "04739509-9d3a-446d-d088-84882b8917bc"

+ },

+ "outputs": [],

+ "source": [

+ "def f(x):\n",

+ " return 2.0 * x * x\n",

+ "\n",

+ "\n",

+ "grad_f = trax.fastmath.grad(f)\n",

+ "\n",

+ "print(f'grad(2x^2) at 1 = {grad_f(1.0)}')\n",

+ "print(f'grad(2x^2) at -2 = {grad_f(-2.0)}')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "p-wtgiWNseWw"

+ },

+ "source": [

+ "### Layers\n",

+ "\n",

+ "Layers are basic building blocks of Trax models. You will learn all about them in the [layers intro](https://trax-ml.readthedocs.io/en/latest/notebooks/layers_intro.html) but for now, just take a look at the implementation of one core Trax layer, `Embedding`:\n",

+ "\n",

+ "```\n",

+ "class Embedding(base.Layer):\n",

+ " \"\"\"Trainable layer that maps discrete tokens/IDs to vectors.\"\"\"\n",

+ "\n",

+ " def __init__(self,\n",

+ " vocab_size,\n",

+ " d_feature,\n",

+ " kernel_initializer=init.RandomNormalInitializer(1.0)):\n",

+ " \"\"\"Returns an embedding layer with given vocabulary size and vector size.\n",

+ "\n",

+ " Args:\n",

+ " vocab_size: Size of the input vocabulary. The layer will assign a unique\n",

+ " vector to each id in `range(vocab_size)`.\n",

+ " d_feature: Dimensionality/depth of the output vectors.\n",

+ " kernel_initializer: Function that creates (random) initial vectors for\n",

+ " the embedding.\n",

+ " \"\"\"\n",

+ " super().__init__(name=f'Embedding_{vocab_size}_{d_feature}')\n",

+ " self._d_feature = d_feature # feature dimensionality\n",

+ " self._vocab_size = vocab_size\n",

+ " self._kernel_initializer = kernel_initializer\n",

+ "\n",

+ " def forward(self, x):\n",

+ " \"\"\"Returns embedding vectors corresponding to input token IDs.\n",

+ "\n",

+ " Args:\n",

+ " x: Tensor of token IDs.\n",

+ "\n",

+ " Returns:\n",

+ " Tensor of embedding vectors.\n",

+ " \"\"\"\n",

+ " return jnp.take(self.weights, x, axis=0, mode='clip')\n",

+ "\n",

+ " def init_weights_and_state(self, input_signature):\n",

+ " \"\"\"Randomly initializes this layer's weights.\"\"\"\n",

+ " del input_signature\n",

+ " shape_w = (self._vocab_size, self._d_feature)\n",

+ " w = self._kernel_initializer(shape_w, self.rng)\n",

+ " self.weights = w\n",

+ "```\n",

+ "\n",

+ "Layers with trainable weights like `Embedding` need to be initialized with the signature (shape and dtype) of the input, and then can be run by calling them.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 598,

+ "status": "ok",

+ "timestamp": 1607149436202,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "4MLSQsIiw9Aw",

+ "outputId": "394efc9d-9e3c-4f8c-80c2-ce3b5a935e38"

+ },

+ "outputs": [],

+ "source": [

+ "from trax import layers as tl\n",

+ "from trax.utils import shapes\n",

+ "\n",

+ "# Create an input tensor x.\n",

+ "x = np.arange(15)\n",

+ "print(f'x = {x}')\n",

+ "\n",

+ "# Create the embedding layer.\n",

+ "embedding = tl.Embedding(vocab_size=20, d_feature=32)\n",

+ "embedding.init(trax.utils.shapes.signature(x))\n",

+ "\n",

+ "# Run the layer -- y = embedding(x).\n",

+ "y = embedding(x)\n",

+ "print(f'shape of y = {y.shape}')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "MgCPl9ZOyCJw"

+ },

+ "source": [

+ "### Models\n",

+ "\n",

+ "Models in Trax are built from layers most often using the `Serial` and `Branch` combinators. You can read more about those combinators in the [layers intro](https://trax-ml.readthedocs.io/en/latest/notebooks/layers_intro.html) and\n",

+ "see the code for many models in `trax/models/`, e.g., this is how the [Transformer Language Model](https://github.com/google/trax/blob/master/trax/models/transformer.py#L167) is implemented. Below is an example of how to build a sentiment classification model."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 473,

+ "status": "ok",

+ "timestamp": 1607149436685,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "WoSz5plIyXOU",

+ "outputId": "f94c84c4-3224-4231-8879-4a68f328b89e"

+ },

+ "outputs": [],

+ "source": [

+ "model = tl.Serial(\n",

+ " tl.Embedding(vocab_size=8192, d_feature=256),\n",

+ " tl.Mean(axis=1), # Average on axis 1 (length of sentence).\n",

+ " tl.Dense(2), # Classify 2 classes.\n",

+ ")\n",

+ "\n",

+ "# You can print model structure.\n",

+ "print(model)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "FcnIjFLD0Ju1"

+ },

+ "source": [

+ "### Data\n",

+ "\n",

+ "To train your model, you need data. In Trax, data streams are represented as python iterators, so you can call `next(data_stream)` and get a tuple, e.g., `(inputs, targets)`. Trax allows you to use [TensorFlow Datasets](https://www.tensorflow.org/datasets) easily and you can also get an iterator from your own text file using the standard `open('my_file.txt')`."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 19863,

+ "status": "ok",

+ "timestamp": 1607149456555,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "pKITF1jR0_Of",

+ "outputId": "44a73b25-668d-4f85-9133-ebb0f5edd191"

+ },

+ "outputs": [],

+ "source": [

+ "from trax.data.loader.tf import base as dataset\n",

+ "\n",

+ "train_stream = dataset.TFDS('imdb_reviews', keys=('text', 'label'), train=True)()\n",

+ "eval_stream = dataset.TFDS('imdb_reviews', keys=('text', 'label'), train=False)()\n",

+ "print(next(train_stream)) # See one example."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "fRGj4Skm1kL4"

+ },

+ "source": [

+ "Using the `trax.data` module you can create input processing pipelines, e.g., to tokenize and shuffle your data. You create data pipelines using `trax.data.Serial` and they are functions that you apply to streams to create processed streams."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 1746,

+ "status": "ok",

+ "timestamp": 1607149458319,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "AV5wrgjZ10yU",

+ "outputId": "82b8e3bc-7812-4cd3-a669-401fef29f1c0"

+ },

+ "outputs": [],

+ "source": [

+ "from trax.data.preprocessing import inputs as preprocessing\n",

+ "from trax.data.encoder import encoder\n",

+ "\n",

+ "data_pipeline = preprocessing.Serial(\n",

+ " encoder.Tokenize(vocab_file='en_8k.subword', keys=[0]),\n",

+ " preprocessing.Shuffle(),\n",

+ " preprocessing.FilterByLength(max_length=2048, length_keys=[0]),\n",

+ " preprocessing.BucketByLength(boundaries=[32, 128, 512, 2048],\n",

+ " batch_sizes=[512, 128, 32, 8, 1],\n",

+ " length_keys=[0]),\n",

+ " preprocessing.AddLossWeights()\n",

+ ")\n",

+ "train_batches_stream = data_pipeline(train_stream)\n",

+ "eval_batches_stream = data_pipeline(eval_stream)\n",

+ "example_batch = next(train_batches_stream)\n",

+ "print(example_batch)\n",

+ "#print(f'shapes = {[x.shape for x in example_batch]}') # Check the shapes."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "l25krioP2twf"

+ },

+ "source": [

+ "### Supervised training\n",

+ "\n",

+ "When you have the model and the data, use `trax.supervised.training` to define training and eval tasks and create a training loop. The Trax training loop optimizes training and will create TensorBoard logs and model checkpoints for you."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 43631,

+ "status": "ok",

+ "timestamp": 1607149504226,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "d6bIKUO-3Cw8",

+ "outputId": "038e6ad5-0d2f-442b-ffa1-ed431dc1d2e0"

+ },

+ "outputs": [],

+ "source": [

+ "from trax.learning.supervised import training\n",

+ "\n",

+ "# Training task.\n",

+ "train_task = training.TrainTask(\n",

+ " labeled_data=train_batches_stream,\n",

+ " loss_layer=tl.WeightedCategoryCrossEntropy(),\n",

+ " optimizer=trax.optimizers.Adam(0.01),\n",

+ " n_steps_per_checkpoint=500,\n",

+ ")\n",

+ "\n",

+ "# Evaluaton task.\n",

+ "eval_task = training.EvalTask(\n",

+ " labeled_data=eval_batches_stream,\n",

+ " metrics=[tl.WeightedCategoryCrossEntropy(), tl.WeightedCategoryAccuracy()],\n",

+ " n_eval_batches=20 # For less variance in eval numbers.\n",

+ ")\n",

+ "\n",

+ "# Training loop saves checkpoints to output_dir.\n",

+ "output_dir = os.path.expanduser('~/output_dir/')\n",

+ "!rm -rf {output_dir}\n",

+ "training_loop = training.Loop(model,\n",

+ " train_task,\n",

+ " eval_tasks=[eval_task],\n",

+ " output_dir=output_dir)\n",

+ "\n",

+ "# Run 2000 steps (batches).\n",

+ "training_loop.run(2000)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-aCkIu3x686C"

+ },

+ "source": [

+ "After training the model, run it like any layer to get results."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "executionInfo": {

+ "elapsed": 1683,

+ "status": "ok",

+ "timestamp": 1607149514303,

+ "user": {

+ "displayName": "",

+ "photoUrl": "",

+ "userId": ""

+ },

+ "user_tz": 480

+ },

+ "id": "yuPu37Lp7GST",

+ "outputId": "fdc4d832-2f1d-4aee-87b5-9c9dc1238503"

+ },

+ "outputs": [],

+ "source": [

+ "example_input = next(eval_batches_stream)[0][0]\n",

+ "example_input_str = encoder.detokenize(example_input, vocab_file='en_8k.subword')\n",

+ "print(f'example input_str: {example_input_str}')\n",

+ "sentiment_log_probs = model(example_input[None, :]) # Add batch dimension.\n",

+ "print(f'Model returned sentiment probabilities: {np.exp(sentiment_log_probs)}')"

+ ]

+ }

+ ],

+ "metadata": {

+ "colab": {

+ "collapsed_sections": [],

+ "last_runtime": {

+ "build_target": "//learning/deepmind/public/tools/ml_python:ml_notebook",

+ "kind": "private"

+ },

+ "name": "Trax Quick Intro",

+ "provenance": [

+ {

+ "file_id": "trax/intro.ipynb",

+ "timestamp": 1595931762204

+ },

+ {

+ "file_id": "1v1GvTkEFjMH_1c-bdS7JzNS70u9RUEHV",

+ "timestamp": 1578964243645

+ },

+ {

+ "file_id": "1SplqILjJr_ZqXcIUkNIk0tSbthfhYm07",

+ "timestamp": 1572044421118

+ },

+ {

+ "file_id": "intro.ipynb",

+ "timestamp": 1571858674399

+ },

+ {

+ "file_id": "1sF8QbqJ19ZU6oy5z4GUTt4lgUCjqO6kt",

+ "timestamp": 1569980697572

+ },

+ {

+ "file_id": "1EH76AWQ_pvT4i8ZXfkv-SCV4MrmllEl5",

+ "timestamp": 1563927451951

+ }

+ ]

+ },

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 0

+}

diff --git a/resources/examples/ipynb/Example-1-Trax-Data.ipynb b/resources/examples/ipynb/Example-1-Trax-Data.ipynb

new file mode 100644

index 000000000..3344c3829

--- /dev/null

+++ b/resources/examples/ipynb/Example-1-Trax-Data.ipynb

@@ -0,0 +1,732 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "id": "view-in-github"

+ },

+ "source": [

+ " "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "6NWA5uxOmBVz"

+ },

+ "outputs": [],

+ "source": [

+ "#@title\n",

+ "# Copyright 2020 Google LLC.\n",

+ "\n",

+ "# Licensed under the Apache License, Version 2.0 (the \"License\");\n",

+ "# you may not use this file except in compliance with the License.\n",

+ "# You may obtain a copy of the License at\n",

+ "\n",

+ "# https://www.apache.org/licenses/LICENSE-2.0\n",

+ "\n",

+ "# Unless required by applicable law or agreed to in writing, software\n",

+ "# distributed under the License is distributed on an \"AS IS\" BASIS,\n",

+ "# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n",

+ "# See the License for the specific language governing permissions and\n",

+ "# limitations under the License."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "zOPgYEe2i7Cg"

+ },

+ "source": [

+ "Notebook Author: [@SauravMaheshkar](https://github.com/SauravMaheshkar)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "jtMr8yxvM2m3"

+ },

+ "source": [

+ "# Introduction"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "yD3A2vRGSDwy"

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "import sys"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# For example, if trax is inside a 'src' directory\n",

+ "project_root = os.environ.get('TRAX_PROJECT_ROOT', '')\n",

+ "sys.path.insert(0, project_root)\n",

+ "\n",

+ "# Option to verify the import path\n",

+ "print(f\"Python will look for packages in: {sys.path[0]}\")\n",

+ "\n",

+ "# Import trax\n",

+ "import trax\n",

+ "\n",

+ "# Verify the source of the imported package\n",

+ "print(f\"Imported trax from: {trax.__file__}\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "v5VsWct1QjPz"

+ },

+ "source": [

+ "# Serial Fn"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "gEa5pT6FQuta"

+ },

+ "source": [

+ "In Trax, we use combinators to build input pipelines, much like building deep learning models. The `Serial` combinator applies layers serially using function composition and uses stack semantics to manage data.\n",

+ "\n",

+ "Trax has the following definition for a `Serial` combinator.\n",

+ "\n",

+ "> ```\n",

+ "def Serial(*fns):\n",

+ " def composed_fns(generator=None):\n",

+ " for f in fastmath.tree_flatten(fns):\n",

+ " generator = f(generator)\n",

+ " return generator\n",

+ " return composed_fns\n",

+ " ```\n",

+ "\n",

+ "The `Serial` function has the following structure:\n",

+ "\n",

+ "* It takes as **input** arbitrary number of functions\n",

+ "* Convert the structure into lists\n",

+ "* Iterate through the list and apply the functions Serially\n",

+ "\n",

+ "---\n",

+ "\n",

+ "The [`fastmath.tree_flatten()`](https://github.com/google/trax/blob/c38a5b1e4c5cfe13d156b3fc0bfdb83554c8f799/trax/fastmath/numpy.py#L195) function, takes a tree as a input and returns a flattened list. This way we can use various generator functions like Tokenize and Shuffle, and apply them serially by '*iterating*' through the list.\n",

+ "\n",

+ "Initially, we've defined `generator` to `None`. Thus, in the first iteration we have no input and thus the first step executes the first function in our tree structure. In the next iteration, the `generator` variable is updated to be the output of the next function in the list.\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "1rkCvxscXtvk"

+ },

+ "source": [

+ "# Log Function"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "oodQFyHDYJHF"

+ },

+ "source": [

+ "> ```\n",

+ "def Log(n_steps_per_example=1, only_shapes=True):\n",

+ " def log(stream):\n",

+ " counter = 0\n",

+ " for example in stream:\n",

+ " item_to_log = example\n",

+ " if only_shapes:\n",

+ " item_to_log = fastmath.nested_map(shapes.signature, example)\n",

+ " if counter % n_steps_per_example == 0:\n",

+ " logging.info(str(item_to_log))\n",

+ " print(item_to_log)\n",

+ " counter += 1\n",

+ " yield example\n",

+ " return log\n",

+ "\n",

+ "Every Deep Learning Framework needs to have a logging component for efficient debugging.\n",

+ "\n",

+ "`trax.data.Log` generator uses the `absl` package for logging. It uses a [`fastmath.nested_map`](https://github.com/google/trax/blob/c38a5b1e4c5cfe13d156b3fc0bfdb83554c8f799/trax/fastmath/numpy.py#L80) function that maps a certain function recursively inside a object. In the case depicted below, the function maps the `shapes.signature` recursively inside the input stream, thus giving us the shapes of the various objects in our stream.\n",

+ "\n",

+ "--\n",

+ "\n",

+ "The following two cells show the difference between when we set the `only_shapes` variable to `False`"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from trax.data.preprocessing import inputs as preprocessing\n",

+ "from trax.data.encoder import encoder\n",

+ "from trax.data.loader.tf import base as dataset\n",

+ "\n",

+ "data_pipeline = preprocessing.Serial(\n",

+ " dataset.TFDS('imdb_reviews', keys=('text', 'label'), train=True),\n",

+ " encoder.Tokenize(vocab_dir='gs://trax-ml/vocabs/', vocab_file='en_8k.subword', keys=[0]),\n",

+ " preprocessing.Log(only_shapes=False)\n",

+ ")\n",

+ "example = data_pipeline()\n",

+ "print(next(example))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "Wy8L-e9qcRY4"

+ },

+ "source": [

+ "# Shuffling our datasets"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-cfg48KgcrlM"

+ },

+ "source": [

+ "Trax offers two generator functions to add shuffle functionality in our input pipelines.\n",

+ "\n",

+ "1. The `shuffle` function shuffles a given stream\n",

+ "2. The `Shuffle` function returns a shuffle function instead"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "4iD21oiycWf4"

+ },

+ "source": [

+ "## `shuffle`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "bVgN1yYAcaKM"

+ },

+ "source": [

+ "> ```\n",

+ "def shuffle(samples, queue_size):\n",

+ " if queue_size < 1:\n",

+ " raise ValueError(f'Arg queue_size ({queue_size}) is less than 1.')\n",

+ " if queue_size == 1:\n",

+ " logging.warning('Queue size of 1 results in no shuffling.')\n",

+ " queue = []\n",

+ " try:\n",

+ " queue.append(next(samples))\n",

+ " i = np.random.randint(queue_size)\n",

+ " yield queue[i]\n",

+ " queue[i] = sample\n",

+ " except StopIteration:\n",

+ " logging.warning(\n",

+ " 'Not enough samples (%d) to fill initial queue (size %d).',\n",

+ " len(queue), queue_size)\n",

+ " np.random.shuffle(queue)\n",

+ " for sample in queue:\n",

+ " yield sample\n",

+ "\n",

+ "\n",

+ "The `shuffle` function takes two inputs, the data stream and the queue size (minimum number of samples within which the shuffling takes place). Apart from the usual warnings, for negative and unity queue sizes, this generator function shuffles the given stream using [`np.random.randint()`](https://docs.python.org/3/library/random.html#random.randint) by randomly picks out integers using the `queue_size` as a range and then shuffle this new stream again using the [`np.random.shuffle()`](https://docs.python.org/3/library/random.html#random.shuffle)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "sentence = [\n",

+ " 'Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Nemo enim ipsam voluptatem quia voluptas sit aspernatur aut odit aut fugit, sed quia consequuntur magni dolores eos qui ratione voluptatem sequi nesciunt. Neque porro quisquam est, qui dolorem ipsum quia dolor sit amet, consectetur, adipisci velit, sed quia non numquam eius modi tempora incidunt ut labore et dolore magnam aliquam quaerat voluptatem. Ut enim ad minima veniam, quis nostrum exercitationem ullam corporis suscipit laboriosam, nisi ut aliquid ex ea commodi consequatur? Quis autem vel eum iure reprehenderit qui in ea voluptate velit esse quam nihil molestiae consequatur, vel illum qui dolorem eum fugiat quo voluptas nulla pariatur?',\n",

+ " 'But I must explain to you how all this mistaken idea of denouncing pleasure and praising pain was born and I will give you a complete account of the system, and expound the actual teachings of the great explorer of the truth, the master-loader of human happiness. No one rejects, dislikes, or avoids pleasure itself, because it is pleasure, but because those who do not know how to pursue pleasure rationally encounter consequences that are extremely painful. Nor again is there anyone who loves or pursues or desires to obtain pain of itself, because it is pain, but because occasionally circumstances occur in which toil and pain can procure him some great pleasure. To take a trivial example, which of us ever undertakes laborious physical exercise, except to obtain some advantage from it? But who has any right to find fault with a man who chooses to enjoy a pleasure that has no annoying consequences, or one who avoids a pain that produces no resultant pleasure?',\n",

+ " 'Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur. Excepteur sint occaecat cupidatat non proident, sunt in culpa qui officia deserunt mollit anim id est laborum',\n",

+ " 'At vero eos et accusamus et iusto odio dignissimos ducimus qui blanditiis praesentium voluptatum deleniti atque corrupti quos dolores et quas molestias excepturi sint occaecati cupiditate non provident, similique sunt in culpa qui officia deserunt mollitia animi, id est laborum et dolorum fuga. Et harum quidem rerum facilis est et expedita distinctio. Nam libero tempore, cum soluta nobis est eligendi optio cumque nihil impedit quo minus id quod maxime placeat facere possimus, omnis voluptas assumenda est, omnis dolor repellendus. Temporibus autem quibusdam et aut officiis debitis aut rerum necessitatibus saepe eveniet ut et voluptates repudiandae sint et molestiae non recusandae. Itaque earum rerum hic tenetur a sapiente delectus, ut aut reiciendis voluptatibus maiores alias consequatur aut perferendis doloribus asperiores repellat.']\n",

+ "\n",

+ "\n",

+ "def sample_generator(x):\n",

+ " for i in x:\n",

+ " yield i\n",

+ "\n",

+ "\n",

+ "example_shuffle = list(preprocessing.shuffle(sample_generator(sentence), queue_size=2))\n",

+ "example_shuffle"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "k-kTDkF-e7Vn"

+ },

+ "source": [

+ "## `Shuffle`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "I5Djvqw2e9Jg"

+ },

+ "source": [

+ "> ```\n",

+ "def Shuffle(queue_size=1024):\n",

+ " return lambda g: shuffle(g, queue_size)\n",

+ "\n",

+ "This function returns the aforementioned `shuffle` function and is mostly used in input pipelines.\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "AA-Z4Sipkq98"

+ },

+ "source": [

+ "# Batch Generators"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "yzwONDulksbd"

+ },

+ "source": [

+ "## `batch`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-DCABkndkudF"

+ },

+ "source": [

+ "This function, creates batches for the input generator function.\n",

+ "\n",

+ "> ```\n",

+ "def batch(generator, batch_size):\n",

+ " if batch_size <= 0:\n",

+ " raise ValueError(f'Batch size must be positive, but is {batch_size}.')\n",

+ " buf = []\n",

+ " for example in generator:\n",

+ " buf.append(example)\n",

+ " if len(buf) == batch_size:\n",

+ " batched_example = tuple(np.stack(x) for x in zip(*buf))\n",

+ " yield batched_example\n",

+ " buf = []\n",

+ "\n",

+ "It keeps adding objects from the generator into a list until the size becomes equal to the `batch_size` and then creates batches using the `np.stack()` function.\n",

+ "\n",

+ "It also raises an error for non-positive batch_sizes.\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "BZMKY6VUpD3M"

+ },

+ "source": [

+ "## `Batch`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "g6pYJHgOpIG4"

+ },

+ "source": [

+ "> ```\n",

+ " def Batch(batch_size):\n",

+ " return lambda g: batch(g, batch_size)\n",

+ "\n",

+ "This Function returns the aforementioned `batch` function with given batch size."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "cmQzaXw9vrbW"

+ },

+ "source": [

+ "# Pad to Maximum Dimensions"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "iL3MuKQIvt-Q"

+ },

+ "source": [

+ "This function is used to pad a tuple of tensors to a joint dimension and return their batch.\n",

+ "\n",

+ "For example, in this case a pair of tensors (1,2) and ( (3,4) , (5,6) ) is changed to (1,2,0) and ( (3,4) , (5,6) , 0)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 51

+ },

+ "id": "lvbBDuq4p4qW",

+ "outputId": "ed69c541-3219-4a23-cf73-4568e3e2882f"

+ },

+ "outputs": [],

+ "source": [

+ "import numpy as np\n",

+ "from trax.data.preprocessing import inputs as preprocessing\n",

+ "\n",

+ "tensors = (np.array([(1., 2.)]), np.array([(3., 4.), (5., 6.)]))\n",

+ "print(type(tensors[0]))\n",

+ "padded_tensors = preprocessing.pad_to_max_dims(tensors=tensors, boundary=3)\n",

+ "padded_tensors"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "PDQQYCdLOkl1"

+ },

+ "source": [

+ "# Creating Buckets"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "RjGD3YKJWj58"

+ },

+ "source": [

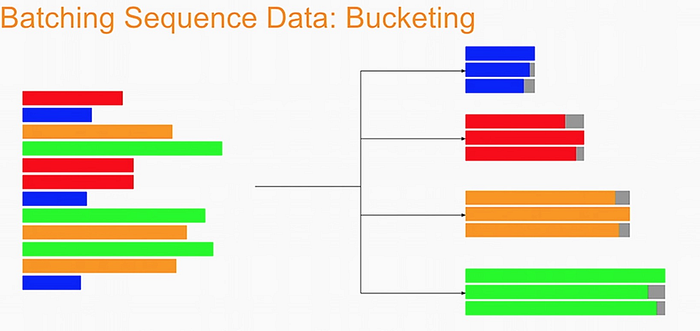

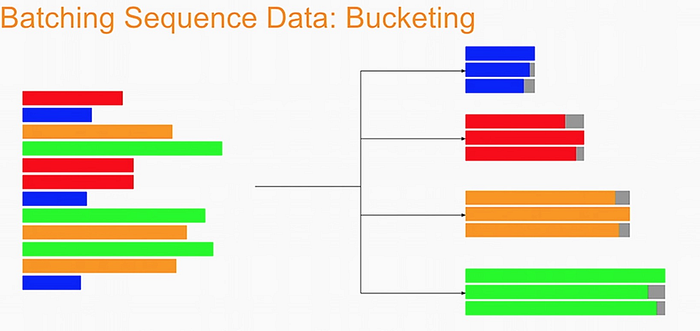

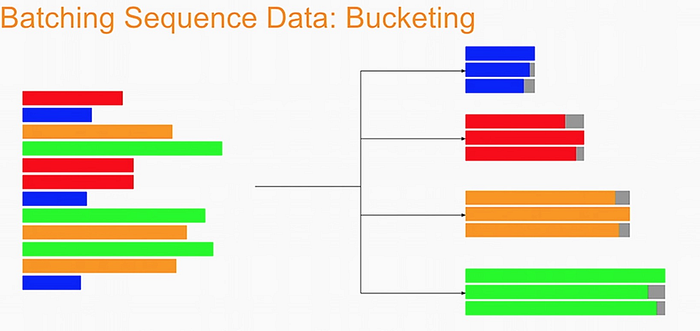

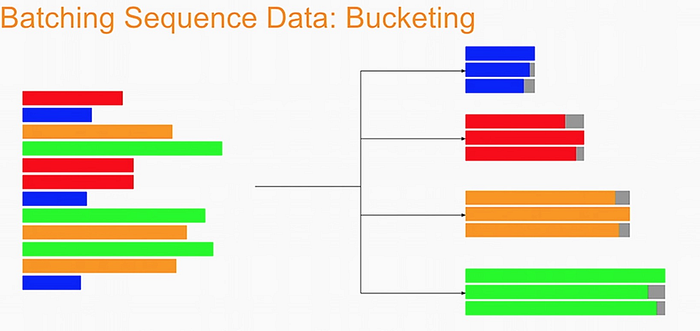

+ "For training Recurrent Neural Networks, with large vocabulary a method called Bucketing is usually applied.\n",

+ "\n",

+ "The usual technique of using padding ensures that all occurences within a mini-batch are of the same length. But this reduces the inter-batch variability and intuitively puts similar sentences into the same batch therefore, reducing the overall robustness of the system.\n",

+ "\n",

+ "Thus, we use Bucketing where multiple buckets are created depending on the length of the sentences and these occurences are assigned to buckets on the basis of which bucket corresponds to it's length. We need to ensure that the bucket sizes are large for adding some variablity to the system."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "17z3ASA-OrSF"

+ },

+ "source": [

+ "## `bucket_by_length`\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "rf5trhANYpy5"

+ },

+ "source": [

+ "> ```\n",

+ "def bucket_by_length(generator, length_fn, boundaries, batch_sizes,strict_pad_on_len=False):\n",

+ " buckets = [[] for _ in range(len(batch_sizes))]\n",

+ " boundaries = boundaries + [math.inf]\n",

+ " for example in generator:\n",

+ " length = length_fn(example)\n",

+ " bucket_idx = min([i for i, b in enumerate(boundaries) if length <= b])\n",

+ " buckets[bucket_idx].append(example)\n",

+ " if len(buckets[bucket_idx]) == batch_sizes[bucket_idx]:\n",

+ " batched = zip(*buckets[bucket_idx])\n",

+ " boundary = boundaries[bucket_idx]\n",

+ " boundary = None if boundary == math.inf else boundary\n",

+ " padded_batch = tuple(\n",

+ " pad_to_max_dims(x, boundary, strict_pad_on_len) for x in batched)\n",

+ " yield padded_batch\n",

+ " buckets[bucket_idx] = []\n",

+ "\n",

+ "---\n",

+ "\n",

+ "This function can be summarised as:\n",

+ "\n",

+ "* Create buckets as per the lengths given in the `batch_sizes` array\n",

+ "\n",

+ "* Assign sentences into buckets if their length matches the bucket size\n",

+ "\n",

+ "* If padding is required, we use the `pad_to_max_dims` function\n",

+ "\n",

+ "---\n",

+ "\n",

+ "### Parameters\n",

+ "\n",

+ "1. **generator:** The input generator function\n",

+ "2. **length_fn:** A custom length function for determing the length of functions, not necessarily `len()`\n",

+ "3. **boundaries:** A python list containing corresponding bucket boundaries\n",

+ "4. **batch_sizes:** A python list containing batch sizes\n",

+ "5. **strict_pad_on_len:** – A python boolean variable (`True` or `False`). If set to true then the function pads on the length dimension, where dim[0] is strictly a multiple of boundary.\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "c0uQZaaPVyF_"

+ },

+ "source": [

+ "## `BucketByLength`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "Qhh21q71aX3l"

+ },

+ "source": [

+ "> ```\n",

+ "def BucketByLength(boundaries, batch_sizes,length_keys=None, length_axis=0, strict_pad_on_len=False):\n",

+ " length_keys = length_keys or [0, 1]\n",

+ " length_fn = lambda x: _length_fn(x, length_axis, length_keys)\n",